Stream with HTTP Requests¶

In this guide, you'll learn how to use the Tinybird Events API to ingest thousands of JSON messages per second with HTTP Requests.

For more details about the Events API endpoint, read the Events API docs.

Setup: Create the target Data Source¶

First, create an NDJSON Data Source. You can use the API, or drag and drop a file onto the UI.

Even though you can add new columns later on, you have to upload an initial file. The Data Source will be created and ordered based upon those initial values.

As an example, upload this NDJSON file:

{"date": "2020-04-05 00:05:38", "city": "New York"}

Ingest from the browser: JavaScript¶

Ingesting from the browser requires making a standard POST request; see below for an example. Use your own Token and change the name of the target Data Source to the one you created. Check that the URL (const url) is the correct one for your region.

Browser High-Frequency Ingest

async function sendEvents(events) {
  // Stamp every event in the batch with the same ISO 8601 timestamp
  const date = new Date();
  events.forEach(ev => {
    ev.date = date.toISOString();
  });

  const headers = {
    'Authorization': 'Bearer TOKEN_HERE',
  };
  const url = 'https://api.tinybird.co/'; // you may be on a different host

  // Send the batch as NDJSON: one JSON object per line
  const rawResponse = await fetch(`${url}v0/events?name=hfi_multiple_events_js`, {
    method: 'POST',
    body: events.map(ev => JSON.stringify(ev)).join('\n'),
    headers: headers,
  });
  const content = await rawResponse.json();
  console.log(content);
}

sendEvents([
  { 'city': 'Jamaica', 'action': 'view' },
  { 'city': 'Jamaica', 'action': 'click' },
]);

Remember: Publishing your Admin Token on a public website is a security vulnerability. It is highly recommended that you create a new Token with more restricted permissions.

Ingest from the backend: Python¶

Ingesting from the backend is a similar process to ingesting from the browser. Use the following Python snippet and replace the Auth Token and Data Source name, as in the example above.

Python High-Frequency Ingest

import requests
import json
import datetime

def send_events(events):
    params = {
        'name': 'hfi_multiple_events_py',
        'token': 'TOKEN_HERE',
    }
    # Stamp each event with the current time in ISO 8601 format
    for ev in events:
        ev['date'] = datetime.datetime.now().isoformat()
    # Serialize the batch as NDJSON: one JSON object per line
    data = '\n'.join([json.dumps(ev) for ev in events])
    r = requests.post('https://api.tinybird.co/v0/events', params=params, data=data)
    print(r.status_code)
    print(r.text)

send_events([
    {'city': 'Pretoria', 'action': 'view'},
    {'city': 'Pretoria', 'action': 'click'},
])

Ingest from the command line: curl¶

The following curl snippet sends two events in the same request:

curl High-Frequency Ingest

curl -i -d $'{"date": "2020-04-05 00:05:38", "city": "Chicago"}\n{"date": "2020-04-05 00:07:22", "city": "Madrid"}\n' -H "Authorization: Bearer $TOKEN" 'https://api.tinybird.co/v0/events?name=hfi_test'

Add new columns from the UI¶

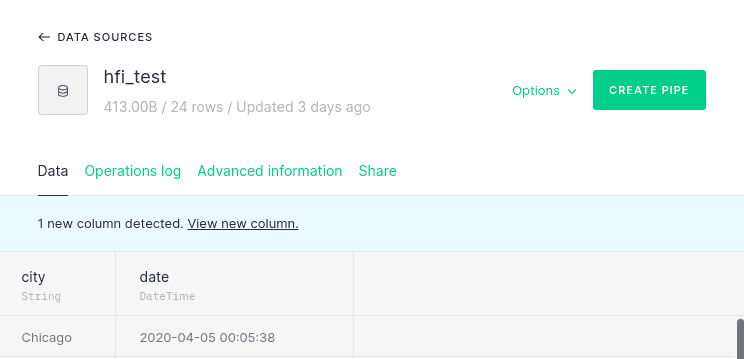

As you add extra information in the form of new JSON fields, the UI will prompt you to include those new columns on the Data Source.

For instance, if you send a new event with an extra field:

curl High-Frequency Ingest

curl -i -d '{"date": "2020-04-05 00:05:38", "city": "Chicago", "country": "US"}' -H "Authorization: Bearer $TOKEN" 'https://api.tinybird.co/v0/events?name=hfi_test'

And navigate to the UI's Data Source screen, you'll be asked if you want to add the new column:

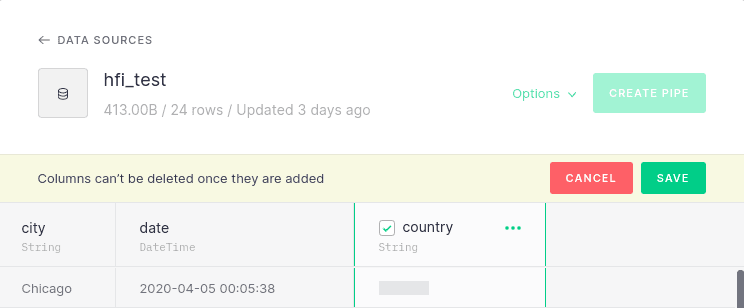

Here, you'll be able to select the desired columns and adjust the types:

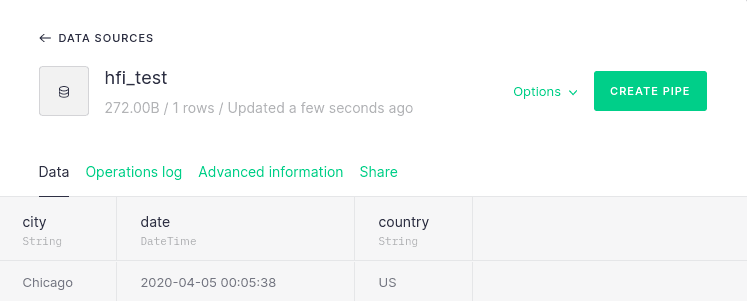

After you confirm the addition of the column, it will be populated by new events.

Error handling and retries¶

Read more about the possible responses returned by the Events API.

When using the Events API to send data to Tinybird, you can choose to 'fire and forget' by sending a POST request and ignoring the response. This is a common choice for non-critical data, such as tracking page hits if you're building Web Analytics, where some level of loss is acceptable.

However, if you're sending data where you cannot tolerate events being missed, you must implement some error handling & retry logic in your application.

Wait for acknowledgement¶

When you send data to the Events API, you'll usually receive an HTTP 202 response, which indicates that the request was successful. However, this response only indicates that the Events API accepted your HTTP request. It does not confirm that the data has been committed into the underlying database.

Using the wait parameter with your request asks the Events API to wait for acknowledgement that the data you sent has been committed into the underlying database.

If you use the wait parameter, you will receive an HTTP 200 response that confirms the data has been committed.

To use this, your Events API request should include wait as a query parameter, with a value of true.

For example:

https://api.tinybird.co/v0/events?wait=true
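As a minimal Python sketch of the same idea, the helper below builds a POST request with wait=true in the query string and treats only an HTTP 200 as confirmation. It uses only the standard library. The function names build_events_request and send_and_wait, the hfi_test Data Source name, and the TOKEN_HERE placeholder are illustrative assumptions, and the host may differ for your region.

```python
import json
import urllib.parse
import urllib.request

# Assumption: default host from the examples in this guide; yours may differ by region.
EVENTS_URL = "https://api.tinybird.co/v0/events"


def build_events_request(events, datasource, token, wait=True):
    """Build (but do not send) a POST request carrying the events as NDJSON."""
    query = {"name": datasource}
    if wait:
        query["wait"] = "true"  # ask the API to acknowledge the commit
    url = f"{EVENTS_URL}?{urllib.parse.urlencode(query)}"
    body = "\n".join(json.dumps(ev) for ev in events).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {token}"},
    )


def send_and_wait(events, datasource, token, timeout=10):
    """Send the events and return True only if the API answered HTTP 200."""
    request = build_events_request(events, datasource, token, wait=True)
    # urlopen raises urllib.error.HTTPError for 4xx and 5xx responses.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status == 200
```

A call such as send_and_wait([{"city": "Chicago"}], "hfi_test", "TOKEN_HERE") blocks until the API responds, so it is likely to take longer per request than a call without wait.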

It is good practice to log your requests to, and responses from, the Events API. This will help give you visibility into any failures for reporting or recovery.

When to retry¶

Failures are indicated by an HTTP 4xx or HTTP 5xx response.

Implement automatic retries only for HTTP 5xx responses, which indicate that a retry might be successful.

HTTP 4xx responses should be logged and investigated, as they often indicate issues that cannot be resolved by retrying the same request.

For HTTP/2 clients, you may receive the 0x07 GOAWAY error. This indicates that there are too many open connections. It is safe to recreate the connection and retry after these errors.
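The status-code rules in this section can be sketched as a small decision helper. This is only an illustration: the function name classify_response and the returned labels are assumptions, not part of the Events API.

```python
def classify_response(status):
    """Map an HTTP status code to the action suggested in this section."""
    if 200 <= status < 300:
        return "ok"           # accepted (202) or committed (200 with wait=true)
    if 500 <= status < 600:
        return "retry"        # a later attempt might succeed
    if 400 <= status < 500:
        return "investigate"  # log it; the same request is unlikely to succeed
    return "unexpected"       # anything else: log and inspect manually
```

Keeping the decision in one place makes it easier to log the outcome of every request next to the action your application took.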

How to retry¶

You should aim to retry any requests that fail with an HTTP 5xx response.

In general, you should retry these requests 3-5 times. If the failure persists beyond these retries, log the failure, and attempt to store the data in a buffer to resend later (for example, in Kafka, or a file in S3).
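As one possible shape for such a buffer, the sketch below appends undelivered events to a local NDJSON file and reads them back later for resending. A local file is an assumption chosen to keep the example self-contained; the same pattern applies to Kafka or S3 with their own clients. The function names and the spool path are hypothetical.

```python
import json
from pathlib import Path


def spool_failed_events(events, spool_path):
    """Append events that could not be delivered to a local NDJSON file."""
    path = Path(spool_path)
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("a", encoding="utf-8") as spool:
        for ev in events:
            spool.write(json.dumps(ev) + "\n")


def read_spooled_events(spool_path):
    """Return all spooled events, or an empty list if nothing was spooled."""
    path = Path(spool_path)
    if not path.exists():
        return []
    with path.open(encoding="utf-8") as spool:
        return [json.loads(line) for line in spool if line.strip()]
```

Because the file is already NDJSON, its contents can be sent as the body of a later Events API request without further conversion.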

It's recommended to use an exponential backoff between retries. This means that, after a retry fails, you should increase the amount of time you wait before sending the next retry. If the issue causing the failure is transient, this gives you a better chance of a successful retry.

Be careful when calculating backoff timings. Events that are waiting to be retried are usually held in memory, so long or uncapped waits can cause your application to run into memory limits.
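A minimal sketch of exponential backoff follows, assuming a hypothetical zero-argument callable send that performs the request and returns the HTTP status code. The defaults (4 retries, a 0.5 second base delay, a 30 second cap) are illustrative values, not recommendations from the Events API. The cap bounds how long a failed batch waits between attempts.

```python
import time


def backoff_delays(retries, base_delay=0.5, max_delay=30.0):
    """Yield the wait before each retry: base, 2x, 4x, ... capped at max_delay."""
    for attempt in range(retries):
        yield min(base_delay * (2 ** attempt), max_delay)


def send_with_retries(send, retries=4, sleep=time.sleep,
                      base_delay=0.5, max_delay=30.0):
    """Call send() once, then retry up to `retries` times while it returns 5xx.

    `send` is a hypothetical zero-argument callable returning an HTTP status
    code. The last status seen is returned so the caller can log or buffer.
    """
    status = send()
    for delay in backoff_delays(retries, base_delay, max_delay):
        if not 500 <= status < 600:
            break  # success or a 4xx: another attempt will not help
        sleep(delay)
        status = send()
    return status
```

If the returned status is still a 5xx after the final attempt, the caller can log the failure and hand the batch to a buffer for later resending.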

Next steps¶

- Learn more about the schema and why it's important.

- Ingested your data and ready to go? Start querying your Data Sources and build some Pipes!