Migrate from DoubleCloud¶

In this guide, you'll learn how to migrate from DoubleCloud to Tinybird and get an overview of how to recreate your setup quickly and safely.

DoubleCloud, a managed data services platform that offers ClickHouse® as a service, has shut down operations. As of October 1, 2024, you can't create new DoubleCloud accounts, and all existing DoubleCloud services must be migrated by March 1, 2025.

Tinybird offers a solution that can be a suitable alternative for existing users of DoubleCloud's ClickHouse service.

Follow this guide to learn two approaches for migrating data from your DoubleCloud instance to Tinybird:

- Option 1: Use the S3 table function to export data from DoubleCloud Managed ClickHouse to Amazon S3, then use the Tinybird S3 Connector to import data from S3.

- Option 2: Export your ClickHouse tables locally, then import the files into Tinybird using the Data Sources API.

Wondering how to create a Tinybird account? It's free! Start here. Need DoubleCloud migration assistance? Please contact us.

Prerequisites¶

You don't need an active Tinybird Workspace to read through this guide, but it's a good idea to understand the foundational concepts and how Tinybird integrates with your team.

At a high level¶

Tinybird is a great alternative to DoubleCloud's managed ClickHouse implementation.

Tinybird is a data platform built for data and engineering teams to solve complex real-time, operational, and user-facing analytics use cases at any scale, with end-to-end latency in milliseconds for streaming ingest and high QPS workloads.

It offers performance comparable to DoubleCloud's, with additional features such as native, managed ingest connectors, multi-node SQL notebooks, and scalable REST APIs for public use or secured with JWTs.

Tinybird is a managed platform that scales transparently, so you don't need to operate clusters, manage shards, or worry about replicas.

See how Tinybird is used by industry-leading companies today in the Customer Stories.

Migrate from DoubleCloud to Tinybird using Amazon S3¶

In this approach, you'll use the s3 table function to export tables to an Amazon S3 bucket, and then import them into Tinybird with the S3 Connector.

This guide assumes that you already have IAM Roles with the permissions needed to write to the S3 bucket (from DoubleCloud) and to read from it (for Tinybird).

Export your table to Amazon S3¶

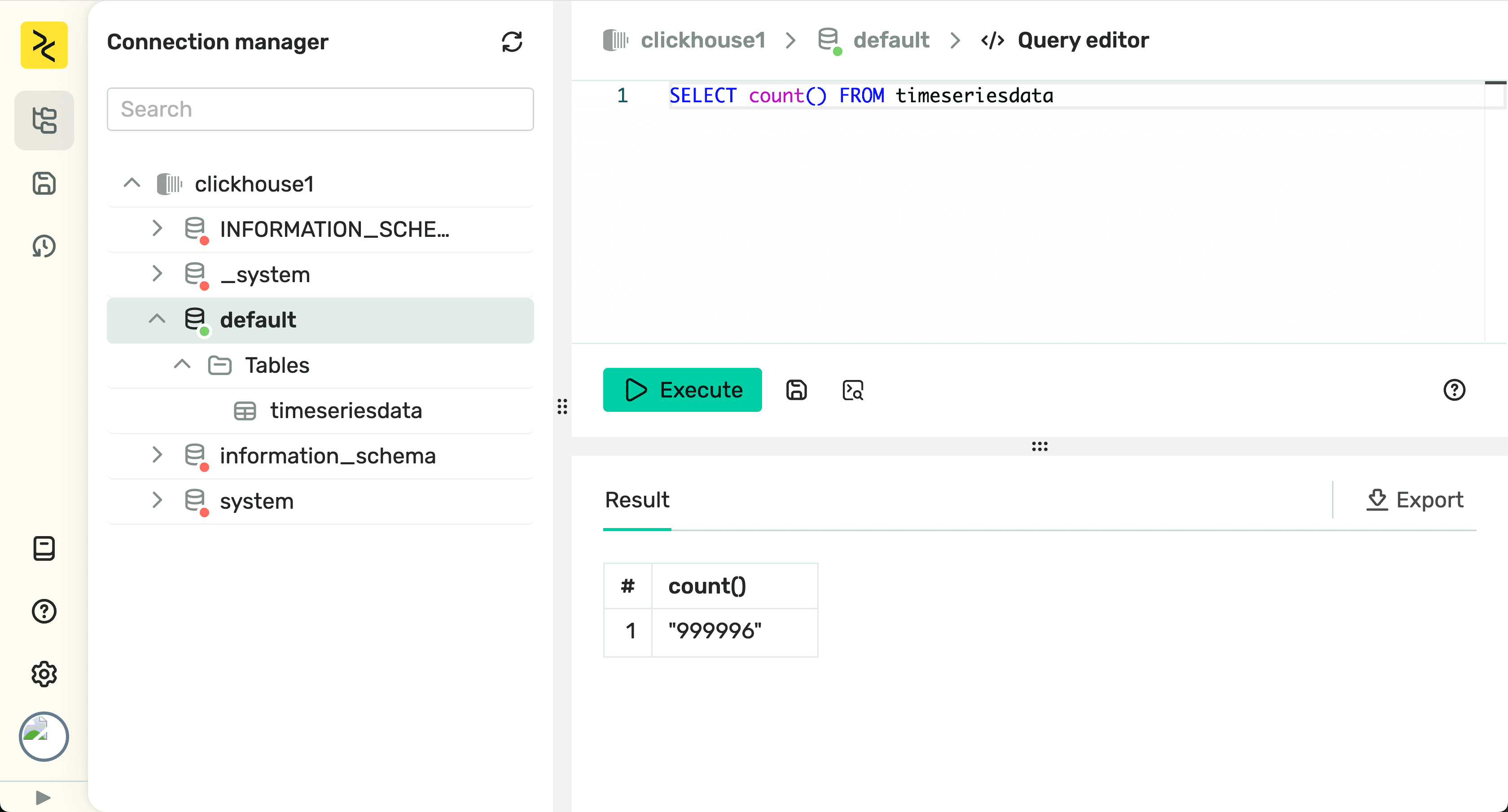

In this guide, we're using a table on our DoubleCloud ClickHouse Cluster called timeseriesdata. The data has 3 columns and 1M rows.


You can export data in your DoubleCloud ClickHouse tables to Amazon S3 with the s3 table function.

Note: If you don't want to expose your AWS credentials in the query, use a named collection.

```sql
INSERT INTO FUNCTION s3(
    'https://tmp-doublecloud-migration.s3.us-east-1.amazonaws.com/exports/timeseriesdata.csv',
    'AWS_ACCESS_KEY_ID',
    'AWS_SECRET_ACCESS_KEY',
    'CSV'
)
SELECT *
FROM timeseriesdata
SETTINGS s3_create_new_file_on_insert = 1
```

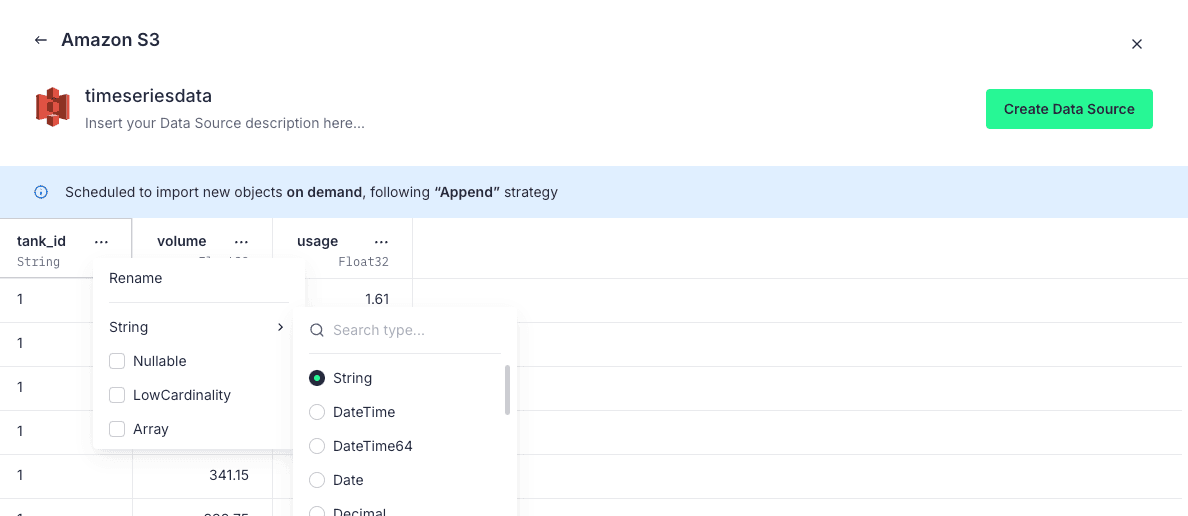
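If you have several tables to move, you might prefer to generate one export statement per table instead of writing each by hand. The following is a minimal sketch, assuming a hypothetical bucket prefix and table list (`BUCKET_URL`, `TABLES`) and hypothetical helper names; it only builds SQL strings with the same `s3` arguments and setting as the query above, which you would then run against DoubleCloud yourself. It accepts only plain table names (no `database.table` form) so they can be interpolated safely.

```python
import re

# Hypothetical values: replace with your own bucket prefix and table names.
BUCKET_URL = "https://tmp-doublecloud-migration.s3.us-east-1.amazonaws.com/exports"
TABLES = ["timeseriesdata"]

# Only accept plain identifiers so the table name can be interpolated safely.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def sql_string(value: str) -> str:
    """Quote a Python string as a ClickHouse string literal."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def export_query(table: str, access_key: str, secret_key: str) -> str:
    """Build an INSERT INTO FUNCTION s3(...) statement that exports one table as CSV."""
    if not _IDENTIFIER.fullmatch(table):
        raise ValueError(f"unexpected table name: {table!r}")
    url = f"{BUCKET_URL}/{table}.csv"
    args = ",\n    ".join(sql_string(v) for v in (url, access_key, secret_key, "CSV"))
    return (
        f"INSERT INTO FUNCTION s3(\n    {args}\n)\n"
        f"SELECT *\nFROM {table}\n"
        "SETTINGS s3_create_new_file_on_insert = 1"
    )


if __name__ == "__main__":
    for name in TABLES:
        # Placeholders only: substitute real credentials at run time, or use a named collection.
        print(export_query(name, "AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY") + ";\n")
```

Keeping credentials as arguments rather than constants makes it easier to pull them from your environment or switch to a named collection later.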

Import to Tinybird with the S3 Connector¶

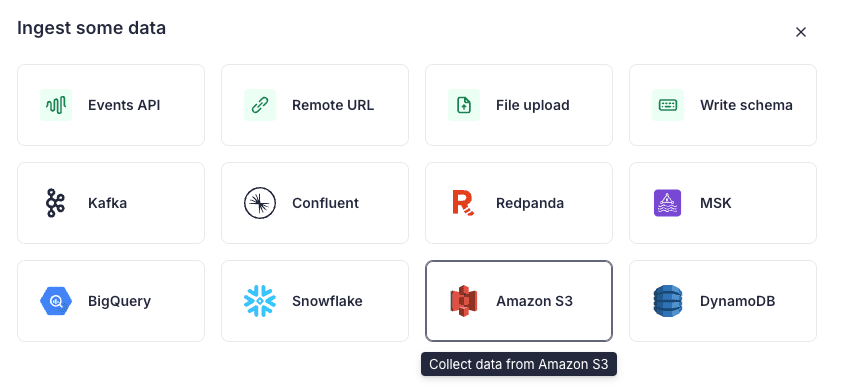

Once your table is exported to Amazon S3, import it to Tinybird using the Amazon S3 Connector.

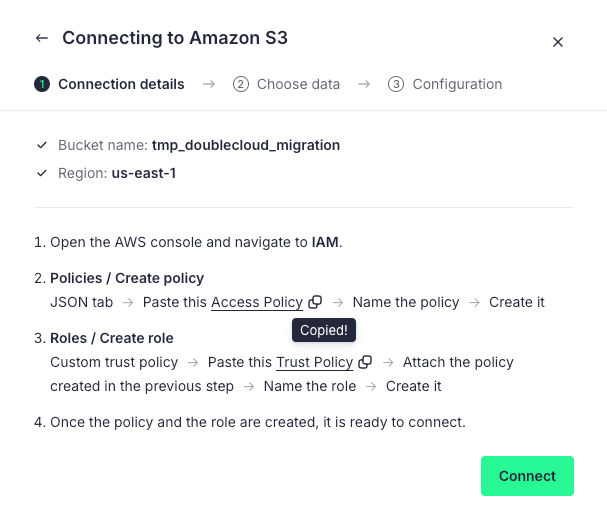

The basic steps for using the S3 Connector are:

- Define an S3 Connection with an IAM Policy and Role that allow Tinybird to read from S3. Tinybird automatically generates the JSON for these policies.

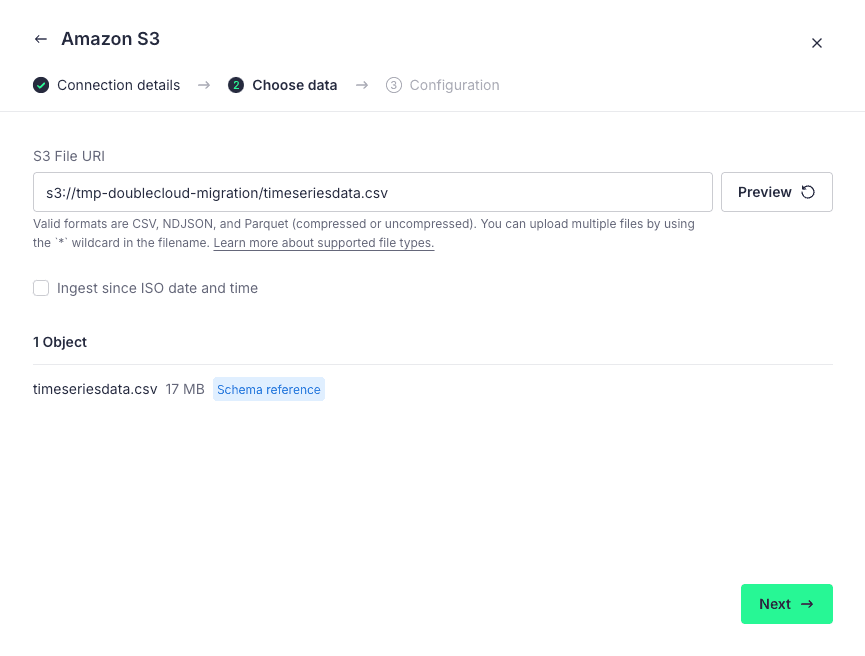

- Supply the file URI (with wildcards as necessary) to define the file(s) containing the contents of your ClickHouse table(s).

- Create an On Demand (one-time) sync.

- Define the schema of the resulting table in Tinybird. You can do this within the S3 Connector UI…

...or by creating a .datasource file and pushing it to Tinybird. An example .datasource file for the timeseriesdata table that matches the DoubleCloud schema and creates the import job from the existing S3 Connection looks like this:

```
SCHEMA >
    `tank_id` String,
    `volume` Float32,
    `usage` Float32

ENGINE "MergeTree"
ENGINE_SORTING_KEY "tank_id"

IMPORT_SERVICE 's3_iamrole'
IMPORT_CONNECTION_NAME 'DoubleCloudS3'
IMPORT_BUCKET_URI 's3://tmp-doublecloud-migration/exports/timeseriesdata.csv'
IMPORT_STRATEGY 'append'
IMPORT_SCHEDULE '@on-demand'
```

- Tinybird will then create and run a batch import job to ingest the data from Amazon S3 and create a new table that matches your table in DoubleCloud. You can monitor the job from the datasource_ops_log Service Data Source.
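When you migrate several tables, writing each .datasource file by hand gets repetitive. The following is a minimal sketch that renders a file with the same directives as the example above from a list of column names and types; the function name `s3_datasource` and its parameters are hypothetical, and it assumes every table uses a plain `MergeTree` engine and an on-demand append import.

```python
from typing import Sequence, Tuple


def s3_datasource(
    columns: Sequence[Tuple[str, str]],
    sorting_key: str,
    connection: str,
    bucket_uri: str,
) -> str:
    """Render a .datasource file that imports a file from S3 on demand."""
    # One indented, backtick-quoted column per line, comma-separated.
    schema = ",\n".join(f"    `{name}` {ctype}" for name, ctype in columns)
    return "\n".join([
        "SCHEMA >",
        schema,
        "",
        'ENGINE "MergeTree"',
        f'ENGINE_SORTING_KEY "{sorting_key}"',
        "",
        "IMPORT_SERVICE 's3_iamrole'",
        f"IMPORT_CONNECTION_NAME '{connection}'",
        f"IMPORT_BUCKET_URI '{bucket_uri}'",
        "IMPORT_STRATEGY 'append'",
        "IMPORT_SCHEDULE '@on-demand'",
        "",
    ])
```

You could save the returned text as, for example, datasources/timeseriesdata.datasource and push it with `tb push`, just as you would a hand-written file.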

Migrate from DoubleCloud to Tinybird using local exports¶

Depending on the size of your tables, you might be able to simply export your tables to a local file using clickhouse-client and ingest them to Tinybird directly.

Export your tables from DoubleCloud using clickhouse-client¶

First, use clickhouse-client to export your tables into local files. Depending on the size of your data, you can choose to compress as necessary. Tinybird can ingest CSV (including Gzipped CSV), NDJSON, and Parquet files.

```shell
./clickhouse client --host your_doublecloud_host --port 9440 --secure \
  --user your_doublecloud_user --password your_doublecloud_password \
  --query "SELECT * FROM timeseriesdata" --format CSV > timeseriesdata.csv
```
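Because Tinybird can ingest Gzipped CSV, you may want to compress a large export before uploading it. A minimal sketch using Python's standard `gzip` module, assuming the export was written to a local CSV such as timeseriesdata.csv; `gzip_file` is a hypothetical helper name:

```python
import gzip
import shutil
from pathlib import Path


def gzip_file(src: Path) -> Path:
    """Compress src into a sibling file with a .gz suffix and return its path."""
    dst = src.with_name(src.name + ".gz")
    with src.open("rb") as fin, gzip.open(dst, "wb") as fout:
        # Copies in chunks, so the export never has to fit in memory at once.
        shutil.copyfileobj(fin, fout)
    return dst
```

For example, `gzip_file(Path("timeseriesdata.csv"))` would write timeseriesdata.csv.gz next to the original file.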

Import your files to Tinybird¶

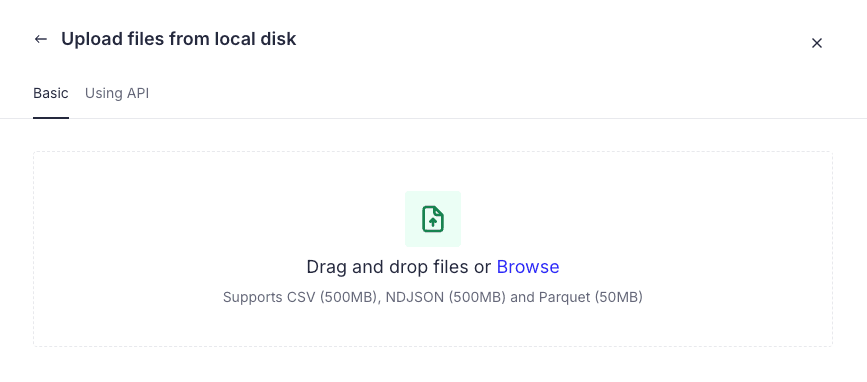

You can drag and drop files into the Tinybird UI…

or upload them using the Tinybird CLI:

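```shell
# Generate a .datasource file with a schema inferred from the CSV
tb datasource generate timeseriesdata.csv
# Push the Data Source definition to your Workspace
tb push datasources/timeseriesdata.datasource
# Append the CSV rows to the new Data Source
tb datasource append timeseriesdata timeseriesdata.csv
```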
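If you'd rather script the upload without the CLI, the same append can go through the Data Sources API as a multipart POST with the file in a `csv` form field. The following is a minimal sketch using only the Python standard library, assuming the Data Source already exists (for example, after `tb push`), a token with append permissions, and the `https://api.tinybird.co` host; your Workspace may use a different regional host. The helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request
import uuid
from pathlib import Path

# Assumption: adjust to the API host of your Workspace's region.
API_HOST = "https://api.tinybird.co"


def build_append_request(token: str, datasource: str, csv_path: Path) -> urllib.request.Request:
    """Build a multipart/form-data POST that appends a local CSV file to a Data Source."""
    boundary = uuid.uuid4().hex
    query = urllib.parse.urlencode({"name": datasource, "mode": "append"})
    body = b"".join([
        f"--{boundary}\r\n".encode(),
        f'Content-Disposition: form-data; name="csv"; filename="{csv_path.name}"\r\n'.encode(),
        b"Content-Type: text/csv\r\n\r\n",
        csv_path.read_bytes(),  # reads the whole file into memory; fine for small exports
        f"\r\n--{boundary}--\r\n".encode(),
    ])
    return urllib.request.Request(
        f"{API_HOST}/v0/datasources?{query}",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )


def append_csv(token: str, datasource: str, csv_path: Path) -> dict:
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(build_append_request(token, datasource, csv_path)) as resp:
        return json.loads(resp.read())
```

Splitting request construction from sending keeps the request easy to inspect before anything goes over the network. For very large files, the S3 approach above is likely a better fit than reading the whole export into memory.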

Note that Tinybird will automatically infer the appropriate schema from the supplied file, but you may need to change the column names, data types, table engine, and sorting key to match your table in DoubleCloud.
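One way to align the inferred schema with DoubleCloud is to take the column list from DoubleCloud itself: `DESCRIBE TABLE timeseriesdata FORMAT TSV` returns one tab-separated row per column, with the name and type in the first two fields. The following is a minimal sketch, assuming you've captured that output as a string, that turns it into a SCHEMA section you could paste into the generated .datasource file. The function names are hypothetical, and any type Tinybird doesn't support still needs manual review.

```python
from typing import List, Tuple


def parse_describe(tsv: str) -> List[Tuple[str, str]]:
    """Return (column, type) pairs from `DESCRIBE TABLE ... FORMAT TSV` output."""
    columns = []
    for line in tsv.splitlines():
        if not line.strip():
            continue  # skip blank lines, such as a trailing newline
        name, ctype = line.split("\t")[:2]  # remaining fields: defaults, comment, codec, TTL
        columns.append((name, ctype))
    return columns


def schema_block(columns: List[Tuple[str, str]]) -> str:
    """Render the columns as a .datasource SCHEMA section."""
    lines = ",\n".join(f"    `{name}` {ctype}" for name, ctype in columns)
    return f"SCHEMA >\n{lines}\n"
```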

Migration support¶

If your migration is more complex, involving many or very large tables, materialized views and populates, or other complex logic, please contact us and we will assist with your migration.

Tinybird Pricing vs DoubleCloud¶

Tinybird's Free plan has no time limit and doesn't require a credit card. It includes 10 GB of data storage (compressed) and 1,000 published API requests per day.

Tinybird's paid plans are available with both infrastructure-based pricing and usage-based pricing. DoubleCloud customers will likely be more familiar with infrastructure-based pricing.

For more information about infrastructure-based pricing and to get a quote based on your existing DoubleCloud cluster, contact us.

If you are interested in usage-based pricing, you can learn more about usage-based billing here.

ClickHouse Limits¶

Note that Tinybird takes a different approach to ClickHouse deployment than DoubleCloud. Rather than provide a full interface to a hosted ClickHouse cluster, Tinybird provides a serverless ClickHouse implementation and abstracts the database behind our APIs, UI, and CLI, exposing SQL only through the editor in our Pipes interface.

Additionally, not all ClickHouse SQL functions, data types, and table engines are supported out of the box. You can find a full list of supported engines and settings here. If your use case requires engines or settings that aren't listed, please contact us.

Useful resources¶

Migrating to a new tool, especially at speed, can be challenging. Here are some helpful resources to get started on Tinybird:

- Read how Tinybird compares to ClickHouse (especially ClickHouse Cloud).

- Read how Tinybird compares to other Managed ClickHouse offerings.

- Join our Slack Community for help understanding Tinybird concepts.

- Contact us for migration assistance.

Next steps¶

If you'd like assistance with your migration, contact us.

- Set up a free Tinybird account and build a working prototype: Sign up here.

- Run through a quick example with your free account: Tinybird quick start.

- Read the billing docs to understand plans and pricing on Tinybird.