I'm impressed with DynamoDB.

Amazon's NoSQL workhorse makes one simple promise: "No matter how big your data gets, I will always be fast."

Like, milliseconds fast. Even with billions of items. That's why it's so popular.

But I, like many, was raised in PostgreSQLand, where all the good folk speak Structured Query Language (SQL).

I like SQL's mental model. There's something uniquely intuitive about the declarative nature of SELECT … FROM … WHERE. I think that's why SQL is not yet dead.

If you're here because you want to query your DynamoDB tables with SQL, I've got you covered. Keep reading to learn how.

But first, let's be clear. DynamoDB is a NoSQL database. It stores data non-relationally. It's schemaless and designed to support data ingestion without structure. Using SQL (literally, "Structured Query Language") to query an unstructured table in DynamoDB doesn't make sense.

There are bad reasons to query DynamoDB with SQL. One such reason is that SQL is just more comfortable for you than DynamoDB's API. If that's the case, then maybe DynamoDB just isn't the right database for you. Maybe you'd be better off using a structured, relational database like RDS or Aurora.

That said, there are good reasons to query DynamoDB tables with SQL. Most of them involve running analytics on DynamoDB: performing SQL operations like aggregating, filtering, and joining that aren't natively supported via DynamoDB's API.

DynamoDB wasn't built for analytics, but that doesn't mean you can't run analytical queries on data from DynamoDB tables. You just need the right tools, and those tools generally use SQL.

Does DynamoDB support SQL at all?

No. DynamoDB is a pure NoSQL database on AWS optimized for key-value lookups. It does not natively support any SQL statements.

That said, you can query DynamoDB with SQL to some extent with PartiQL, an open source SQL-compatible query language maintained by engineers at Amazon Web Services.

But even PartiQL is limited here. Only a small subset of statements and operations work with DynamoDB. Just a few of the most notable limitations:

- No aggregate functions. You can't use

SUM,COUNT,AVERAGE, or any other aggregating SQL statements with PartiQL on DynamoDB. - Query only one table at a time. You can only query one DynamoDB table per PartiQL query, so joins, subqueries, and CTEs aren't available.

- No support for GSIs: PartiQL isn't smart enough to select from a secondary index when the

WHEREclause filters over the primary key said index. You have to directly specify the secondary index in theFROMclause to get that benefit, which adds some mental overhead. - Consumes RCUs: PartiQL queries aren't free. They consume DynamoDB read capacity units (RCUs) just like every other read operation.

PartiQL might seem like a nice dev-friendly way to minimize the verbosity of DynamoDB's API, but aside from its limited SQL support, PartiQL can be dangerous to rely on.

So how do you query DynamoDB?

DynamoDB supports three different read types: GetItem, Query, and Scan.

GetItem is DynamoDB's bread and butter. Given a primary key, DynamoDB can fetch a single record from a massive table in milliseconds or less. BatchGetItem works similarly, for up 100 items across multiple partitions.

DynamoDB also supports a Query statement, which can be useful for grabbing a limited number of items within the same partition. You can Query with a partition key to fetch all the items in a partition and use the sort key to filter your read. Add secondary indexes to the mix and you can pretty efficiently retrieve small ranges of items with a decent amount of flexibility on your filter key.

DynamoDB also has a Scan statement, but tread lightly. Scan does what it says it will: It scans and retrieves every single item in a DynamoDB table. You can filter the results, but the filtering happens after read, not before. You typically reserve Scan for migrations, otherwise your RCUs dry up in a hurry.

As you can see, DynamoDB's read patterns are generally transactional. They're designed to fetch one or a few items from the database.

SQL also supports fetching items from a database via SELECT statements (and WHERE filters). But SQL isn't a purely transactional language: It also includes functions for aggregating (SUM, COUNT, AVERAGE, etc.) and joining tables together (JOIN, INNER JOIN, etc.). If you want to apply these functions to DynamoDB tables - typically for analytics use cases - SQL would be better, right?

How to query DynamoDB tables with SQL

The key to querying DynamoDB with SQL is to not use DynamoDB 😉. Rather, copy your tables into another database that supports SQL. That's really the only way to do it.

There are varying technologies you can use here. Amazon Redshift comes to mind, and it's relatively easy to get data from DynamoDB to Redshift. If you came here to use SQL to run ad hoc/BI queries on DynamoDB tables, Redshift will suit you just fine.

Amazon Athena is another option. You could dump data from a DynamoDB Stream into S3 using Kinesis Firehose, and run a Glue crawler to catalog the data in S3 for querying in Athena.

You could also use RDS or Aurora, but that sort of defeats the purpose of using DynamoDB in the first place. You likely chose DynamoDB as a transactional database because you had certain low latency or high concurrency requirements that RDS and Aurora couldn't scale to support. Still, you could use these databases to run some light analytical queries with SQL. I'm not sure why you would, but you could.

All of these approaches, while feasible, have something in common: they're slow. Redshift and Athena can handle long-running, complex SQL queries, but you'll never achieve anything close to the read performance you get in your transactional workloads on DynamoDB. AWS-flavored Postgres won't support complex analytics with anything near acceptable cost or performance.

Going back to the simple promise that DynamoDB makes (and why you chose it in the first place): It's fast. If you want your SQL queries to be similarly fast, you shouldn't use any of the above technologies.

Use Tinybird as a secondary index for DynamoDB to query with SQL

Tinybird is a real-time analytics platform with a full-featured SQL dialect based on open source ClickHouse. It's very fast, capable of achieving sub-second latencies for complex SQL queries over billions of rows of data.

Copying data from DynamoDB to Tinybird requires relatively little effort using the Tinybird DynamoDB connector, a managed ETL that keeps a stateful copy of your DynamoDB table in Tinybird with only a few seconds of lag at most.

You can see how the connector works in this short video:

In the context of DynamoDB, you can think of Tinybird almost like a global secondary index, but instead of another DynamoDB table with a different primary key, you have an entirely different database optimized for complex and performant SQL queries.

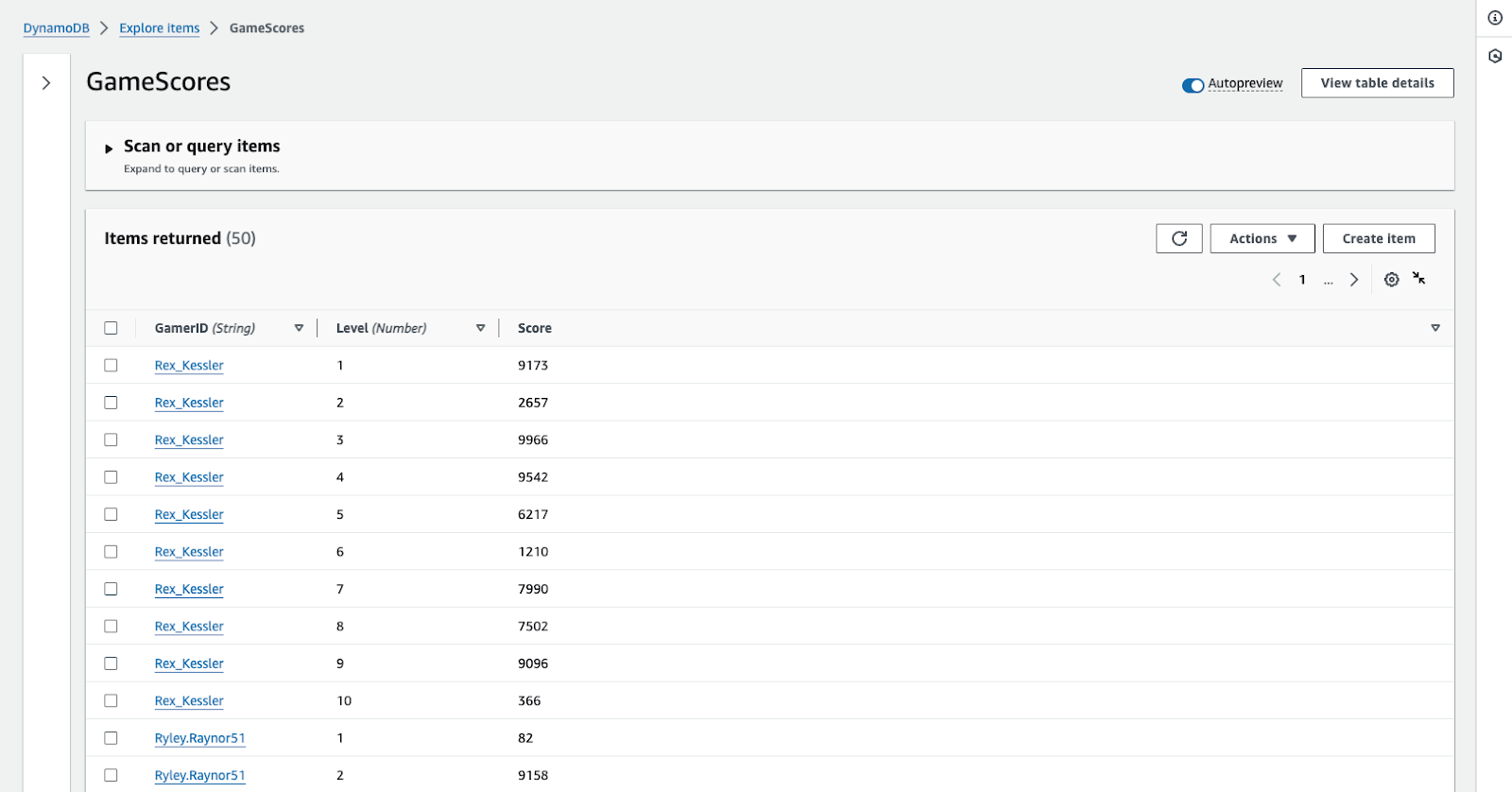

Consider a simple example DynamoDB table called GameScores with GamerID partition key and a Level sort key:

You could create a corresponding table in Tinybird with the following schema:

Then you could query this table using SQL to get the percentile ranking of a gamer's score on a particular level compared to all other gamers:

This is a simple example with a simple DynamoDB table, but the same principle applies no matter how complex your DynamoDB table. Tinybird even works well with single-table design in DynamoDB thanks to functions and data types that aid in handling unstructured and semi-structured data.

Aggregate DynamoDB data in Tinybird

Tinybird is a platform for analytics that uses a columnar storage format, so SQL aggregations in Tinybird are very performant. You can achieve sub-second aggregations on a specific key (column) even when your DynamoDB tables contain billions of items.

Compared to other DynamoDB aggregation patterns, Tinybird will likely be much more performant and much less brittle.

Enrich DynamoDB tables using JOINs

Another benefit of Tinybird is its support for SQL JOINs and its many integrations with other data sources. For example, if you have a data stream from Kinesis or some dimensional table in RDS or S3 and you want to enrich your DynamoDB tables, Tinybird can do that.

Something like the below is quite trivial in Tinybird:

Create SQL-based APIs that scale

Here's why I really love Tinybird for this use case: Not only can you use Tinybird to query DynamoDB tables with SQL, but you can also instantly publish any SQL query as a scalable REST API Endpoint.

Why does this matter?

Because you're probably using DynamoDB as a part of the backend for a real-time application that demands low-latency reads at high concurrency.

Tinybird gives you a peer to DynamoDB in that effort, achieving for analytics what DynamoDB achieves for transactions. With Tinybird APIs, you have a convenient way to integrate low-latency, high-concurrency SQL queries for analytics into your application

Why use Tinybird to query DynamoDB with SQL

Using Tinybird to query DynamoDB tables with SQL offers some nice perks:

- Full-featured SQL. Unlike PartiQL, Tinybird's SQL dialect is not limited to a small subset of SQL. Instead, it gives you a wide range of statements and functions to run highly complex SQL queries - including aggregations, filters, and joins.

- Doesn't consume DynamoDB capacity. Since the Tinybird DynamoDB connector uses native DynamoDB features in PITR and Streams, it doesn't consume any RCUs.

- Automatic deduplication. Anytime you're trying to use transactional data (think CRUD operations) in an event-driven architecture, you have to think about deduplication. Replaces and updates don't work in Tinybird like they do in DynamoDB. Every change is a new event, and thus a new row in the raw table. This will be true in other columnar databases, not just Tinybird. Fortunately, Tinybird uses a

ReplacingMergeTreeengine for DynamoDB ingestion, so CRUD operations are automatically deduplicated into a current state in the resulting table. - "Zero-ETL"-like experience. Tinybird's DynamoDB connector isn't technically a "Zero-ETL". Rather, it's a managed ETL that runs in the background so you don't have to worry about it. All you see is your DynamoDB table copied into an external database optimized for SQL-based analytics.

- Performance. Perhaps the most valuable benefit Tinybird offers is its performance characteristics. You likely use DynamoDB because of its performance: fast reads and writes regardless of scale. Tinybird will give you very similar performance characteristics even on very complex analytical SQL queries. So you can use the queries you build in Tinybird in the same application backed by your DynamoDB instance.

Common questions about using Tinybird to query DynamoDB tables with SQL

How does Tinybird handle DynamoDB's schemaless design?

DynamoDB is a NoSQL database that uses a schemaless storage format. Tinybird is a columnar database that stores data with a predefined schema using typed columns.

That said, Tinybird has pretty good built-in support for handling unstructured and semi-structured data. It's not uncommon to ingest all of the items from a DynamoDB table into a single raw JSON record, and then parse it downstream. Tinybird supports JSONExtract*() functions that simplify JSON parsing, and with Materialized Views you can "normalize" unstructured data into separate, structured tables in real time.

How much does it cost to use Tinybird?

Tinybird offers a time-unlimited free plan that includes the DynamoDB connector, up to 10 GB of storage (compressed), and 1,000 API requests per day for free. So if you want to kick the tires and try Tinybird for querying your DynamoDB tables with SQL, you can signup for free and start right now.

If your usage does exceed the free tier, you'll be charged based on usage: $0.07/GB of written/read data and $0.34/GB of stored, compressed data (Tinybird generally achieves compression rations of 3.5-10x).

Can I deploy Tinybird on AWS?

You can create Tinybird Workspaces on shared infrastructure in multiple AWS regions. Tinybirds also offers dedicated AWS clusters for Enterprise plans.

Can I use Tinybird with a DynamoDB single-table design?

Yes. Tinybird can handle normalizing data from DynamoDB even when a single-table design is used.

How fast is Tinybird?

Tinybird's performance with DynamoDB should be considered in terms of write speed and read speed. The end-to-end latency from PutItem in DynamoDB to API response from Tinybird is generally only a few seconds. When writing data from DynamoDB to Tinybird, data generally syncs within a few seconds or less. On the read side, even poorly optimized queries can achieve sub-second latencies even for very large datasets (millions of items or more). Across all shared infrastructure, Tinybird APIs achieve p95 response times of <100 ms.