In Product Development, “User Testing” is often easier to talk about than to actually do: it is hard to find the time to reach out to users or volunteers, to schedule the interviews, to prepare scripts, to gather notes and to review the outcome of the sessions with the teams.

However, watching people use your product is both humbling (often the feeling you get after watching users in action is “crap, it’s all wrong!”) and eye-opening: it really helps clarify what needs to improve and in what order, and it highlights the things that don’t work at all and that you might need to rethink.

And beyond mere improvements, it really helps you understand who your users are, how they think and what they need.

For these reasons, because it is hard and because it is worth it, making User Testing part of your normal development process sooner rather than later is a good idea. That is exactly what we are doing at Tinybird. We want to continuously validate our ideas, no matter how crazy they are, so we can build a fast, qualitative product feedback loop.

Do you ever struggle querying and getting insights from large quantities of data? We know how you feel! Sign up to our Closed Beta & User Testing sessions, get a sneak peek at what we are building and help us with your feedback: https://t.co/wJecgoxoES

— Tinybird (@tinybirdco) July 1, 2019

At the beginning of June we reached out via our blog and via Twitter asking for volunteers, and this is what happened:

The numbers

- 32 people signed up in 36 hours. We closed the form because we didn’t feel we would be able to handle them all!

- 30 of them agreed to join our newsletter (just a ‘vanity metric’, but we are so incredibly grateful for it)

- Half of them signed up for a remote session and half preferred to come in person. Some of these are people we know who were just keen to come and help out. We love you.

- 6 people canceled for various reasons. Two had just become parents! Others had last-minute reasons to cancel and some just wanted a demo.

- 11 haven’t yet committed to a specific date.

- 9 people completed the User Testing: 5 in an in-person workshop (1 of them was a walk-in) and 4 remotely.

- 7 people are scheduled to join us in our next round of User Testing at the end of August. We moved it for scheduling and staffing reasons, but the delay also made sense because it gives us time to implement some changes and validate them with these testers.

Although the idea that 5 to 8 testers are all you need to find 85% of the problems with your design is apparently a myth, we did get a wealth of feedback and a number of avenues to pursue through our initial 9 testers.
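The 85% figure is usually traced back to Jakob Nielsen’s model, in which each tester independently uncovers any given problem with the same probability L (he reported an average of about 31%), so n testers are expected to find a share of 1 - (1 - L)^n of the problems. The minimal Python sketch below only reproduces that arithmetic; the 0.31 default is Nielsen’s reported average, not something we measured, and treating every problem and every tester as equally likely to meet is a big simplification.

```python
def share_of_problems_found(n_testers: int, discovery_rate: float = 0.31) -> float:
    """Expected fraction of usability problems uncovered by n_testers.

    Assumes (as in Nielsen's model) that every tester independently finds
    any given problem with the same probability, discovery_rate. The 0.31
    default is Nielsen's reported average, not a Tinybird measurement.
    """
    if n_testers < 0 or not 0.0 <= discovery_rate <= 1.0:
        raise ValueError("n_testers must be >= 0 and discovery_rate in [0, 1]")
    # A given problem is missed by every tester with probability (1 - L)^n,
    # so the expected share of problems found is the complement of that.
    return 1.0 - (1.0 - discovery_rate) ** n_testers


if __name__ == "__main__":
    # Compare a few panel sizes, including the 9 testers from our first round.
    for n in (1, 5, 8, 9):
        print(f"{n} testers -> {share_of_problems_found(n):.0%} of problems")
```

With the default rate, five testers come out at roughly 84%, which is where the rounded “85%” comes from.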

To understand the test: a bit about our product

We aim to build the ideal product for querying huge quantities of data in real time through interactive applications. For that to happen, it needs to make three things really easy:

- ingesting huge quantities of structured data;

- querying, transforming and preparing that data for consumption; and

- creating API endpoints that third parties can consume securely.

All of this is possible through both our UI and our APIs, but with this particular user test we wanted to validate that our recently revamped UI really does make at least the basics of all this easy.

The Answers We Sought

When setting out to perform a User Test, you have to be clear and explicit about what it is you actually want to validate. In our case, we designed the test to try to answer these questions:

- When given a URL of a CSV file, can users figure out how to import it easily? Is it clear enough that they can provide the URL directly rather than download the file and upload it?

- When asked to do some exploratory queries, do people use the automatically created Pipe or do they create a new one?

- When trying to clean up data, do they realize they can create additional SQL queries that filter the results of their previous query?

- Do they understand how to publish Pipes as API endpoints?

- Should published Pipes be editable?

- Is it obvious they can visualize the results of a query in a chart?

That’s quite a lot of things to validate! So we created a test script that would try to cover all of those things.

The Test

The actual test started with an explanation of the two main concepts in Tinybird (Datasources and Pipes) so that testers would not be totally lost.

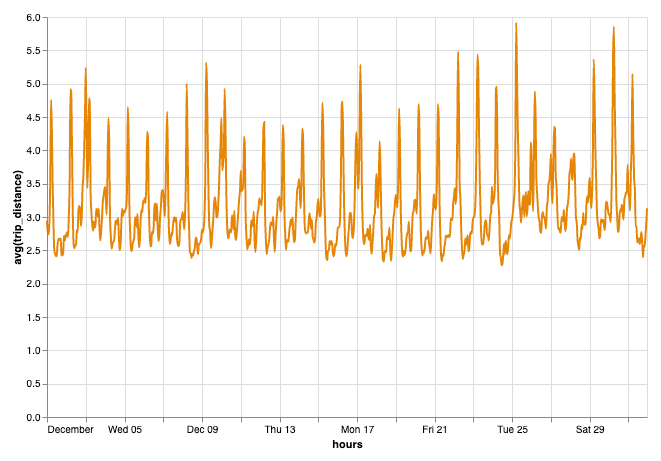

The exercise consisted of creating a chart like the one below in Tinybird, using 8 million rows of data that we provided (NYC Taxi data for the remote testers, Madrid traffic data for those who came in person):

To get there, they had to import the data into Tinybird and then run some queries to clean it up and prepare it for the chart.
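For readers who haven’t used Pipes, the “clean up, then prepare” flow can be sketched with Python’s built-in sqlite3 module, where each SQL view stands in for one query step and reads from the previous step rather than from the raw table. This is only an analogy: it is not Tinybird’s API or SQL dialect, and the `trips` table, its columns and its rows are hypothetical, loosely inspired by the NYC Taxi data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical stand-in for an imported Datasource: a handful of taxi trips.
conn.execute("CREATE TABLE trips (pickup_hour INTEGER, passengers INTEGER, fare REAL)")
conn.executemany(
    "INSERT INTO trips VALUES (?, ?, ?)",
    [(8, 1, 12.5), (8, 0, 9.0), (9, 2, -3.0), (9, 1, 20.0), (9, 3, 15.5)],
)

# Step 1: a clean-up query that drops rows with no passengers or a non-positive fare.
conn.execute("""
    CREATE VIEW clean_trips AS
    SELECT * FROM trips
    WHERE passengers > 0 AND fare > 0
""")

# Step 2: a second query that reads from step 1 (not from the raw table)
# and aggregates the data into the shape a chart needs.
conn.execute("""
    CREATE VIEW trips_per_hour AS
    SELECT pickup_hour, COUNT(*) AS trips, AVG(fare) AS avg_fare
    FROM clean_trips
    GROUP BY pickup_hour
    ORDER BY pickup_hour
""")

for row in conn.execute("SELECT * FROM trips_per_hour"):
    print(row)
```

Because the second query builds on the first, the clean-up rules can change without touching the aggregation that feeds the chart, which is the kind of chaining we wanted testers to discover.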

Watch the 2-minute video below to see how you’d go about it; it is very easy to do with Tinybird (especially now, after the improvements!):

Some key things we learnt (or were reminded of)

Most of the things you take for granted are not obvious at all. Users often don’t see links that are not obviously links, or don’t realise that a field is editable unless it is obviously editable. They will eventually learn, but be conscious of how steep you make the learning curve.

Too much magic gets in the way of learning. The first time you use a product you are learning new skills; sometimes it is better to lay out all the steps clearly than to try to speed things up. For instance, we were automatically creating a Pipe for every new Datasource; we did this because we wanted to make it easy to explore data right away, but it got in the way of people learning about Pipes and how to use them. It also confused them to see things they had not created pop up in their account.

“Undo” patterns are essential, especially for steps that make things “public” (like publishing API endpoints).

How you word things has an impact on the results of the test. If there is a big “EXPORT” button and you ask users to “export data” to a Google Spreadsheet, they will likely find it. But the results are often different if you ask them, “how would you use that data in a Google Spreadsheet?”

In an upcoming blog post we will detail the main changes we made based on the feedback gathered through these tests, so stay tuned, and sign up to the Tinybird newsletter so you don’t miss it.

Would you like to help us with future User Testing?

As we mentioned at the beginning, User Testing is going to be a key part of our development process, so if you are keen to help out, drop your details in this form and we will get in touch!