Managed ClickHouse is trending.

The open source DBMS currently has over 43K GitHub stars and rising, and it seems like everybody is adopting it for real-time analytics use cases.

There's good reason for the hype. ClickHouse® is arguably the fastest OLAP database in the world. Its column-oriented storage format and SQL engine make it tremendously effective as a true DBMS for handling large-scale analytics on streaming and event-driven data architectures.

But.

(There's always a but.)

ClickHouse® is known for its complexity. With great power comes great responsibility, and some people simply don't want to be responsible for setting up and maintaining a ClickHouse® cluster.

That doesn't mean you shouldn't use ClickHouse®. You absolutely should, especially if you are trying to build scalable real-time, user-facing analytics over any kind of time series data.

But unless you intend to hunker down and learn the deep internals of this powerful but mercurial database, you probably want some help, likely in the form of a ClickHouse managed service.

And even if you can learn the ClickHouse® deep magic, you still might want a managed service for no other reason than you don't want to maintain infra. That's a perfectly valid reason to choose ClickHouse Cloud or another hosted ClickHouse solution over self-hosting.

If ClickHouse Cloud or another hosted ClickHouse option is what you're after, skip ahead to see some options and how they compare. If you're not convinced that a managed service is for you, keep reading.

Why choose managed ClickHouse® over self-hosted ClickHouse®?

For the same reason you choose "buy" over "build" in any other case: time and money. Building things takes time and costs money. You buy off the shelf to get the economies afforded by someone who has been there before you. For a detailed breakdown of what self-hosting actually costs in 2025, including infrastructure and hidden engineering expenses, see our comprehensive analysis of self-hosted ClickHouse® costs.

But let's dig a bit deeper into what you actually need to build and maintain for an effective self-hosted ClickHouse®:

- High Availability. High Availability (HA) is critical for production databases. If a node fails, you need a replica ready to take over, and you need to manage failover gracefully. A highly available ClickHouse® deployment needs, at minimum, 2 replicated ClickHouse® instances, a ZooKeeper ensemble (or ClickHouse® Keeper) for coordination, and a load balancer.

- Upgrades. ClickHouse® is upgraded frequently (stable releases ship roughly every month, and long-term support (LTS) releases roughly twice a year). Upgrading unlocks new features and is required to pick up security fixes, but it can also introduce regressions.

When you upgrade a ClickHouse® cluster, you have to consider all running queries, read and write paths, materialized views being populated, and more. This is far from trivial.

- Write/Read Services. ClickHouse® is often used in fast-paced, high-scale data applications with very high ingest throughput and high query concurrency, where responses are expected in under a second.

When using ClickHouse®, you have to consider ancillary services to the "left and right" of the database. How will you handle streaming ingestion when ClickHouse® prefers batched writes? How will you expose the query engine to your applications?

These things take time to build and money to host.

- Observability. Databases don't exist in a vacuum. They need to be monitored, and ClickHouse® is no exception.
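The question of streaming ingestion against ClickHouse®'s preference for batched writes is usually answered with a buffer. The buffer collects rows and writes them as a single insert, because every insert creates a new data part that ClickHouse® must later merge. Below is a minimal Python sketch. It assumes a caller-supplied `flush` callback, which is hypothetical: in a real writer it would issue one INSERT per batch. The thresholds are illustrative only.

```python
import time
from typing import Any, Callable, List, Optional


class MicroBatcher:
    """Buffers rows and hands them to `flush` in batches, since ClickHouse
    copes far better with a few large inserts than with many tiny ones."""

    def __init__(
        self,
        flush: Callable[[List[Any]], None],
        max_rows: int = 10_000,
        max_wait_s: float = 1.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._flush = flush
        self._max_rows = max_rows
        self._max_wait_s = max_wait_s
        self._clock = clock
        self._buffer: List[Any] = []
        self._first_row_at: Optional[float] = None

    def add(self, row: Any) -> None:
        if not self._buffer:
            self._first_row_at = self._clock()
        self._buffer.append(row)
        # Flush when the batch is full or its oldest row has waited too long.
        if len(self._buffer) >= self._max_rows or self._expired():
            self.flush()

    def _expired(self) -> bool:
        return (
            self._first_row_at is not None
            and self._clock() - self._first_row_at >= self._max_wait_s
        )

    def flush(self) -> None:
        if self._buffer:
            self._flush(self._buffer)
            self._buffer = []
            self._first_row_at = None


# Usage: batches of 3 rows, collected in a list instead of sent to ClickHouse.
batches: List[List[Any]] = []
batcher = MicroBatcher(batches.append, max_rows=3, max_wait_s=60)
for i in range(7):
    batcher.add({"event_id": i})
batcher.flush()  # drain the remainder on shutdown
print([len(b) for b in batches])
```

This sketch only checks a batch's age when a new row arrives. A production writer would also flush from a background timer, so that a quiet stream doesn't leave rows waiting indefinitely.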

There's more here. ClickHouse® is complex, the learning curve is steep, and it may take too long to fully harness its power, especially for smaller engineering teams.

Current options for Managed ClickHouse®

Fortunately for those who don't want the headaches of scaling their own ClickHouse® cluster, there are a growing number of managed ClickHouse® options. You can find an exhaustive list further below, but three of the most popular managed ClickHouse® products currently on the market are (in no particular order):

- ClickHouse® Cloud

- Altinity

- Tinybird

Below you'll find a quick comparison of each of the three in terms of use cases, developer experience, cost, and critical features.

ClickHouse® Cloud

The "official" managed ClickHouse®, created by ClickHouse®, Inc., the maintainers of the OSS ClickHouse® project.

Highlights

- Available on AWS, GCP, and Azure

- Automated scaling on prescribed compute range

- Automated replication and backups

- Automatic service idling when inactive

- Low data storage costs backed by cloud-native architecture and object storage

- Interactive SQL Console on the Web UI

- Visualize any query result as a chart

- Terraform provider for automating infrastructure management

- Direct access to the ClickHouse® database

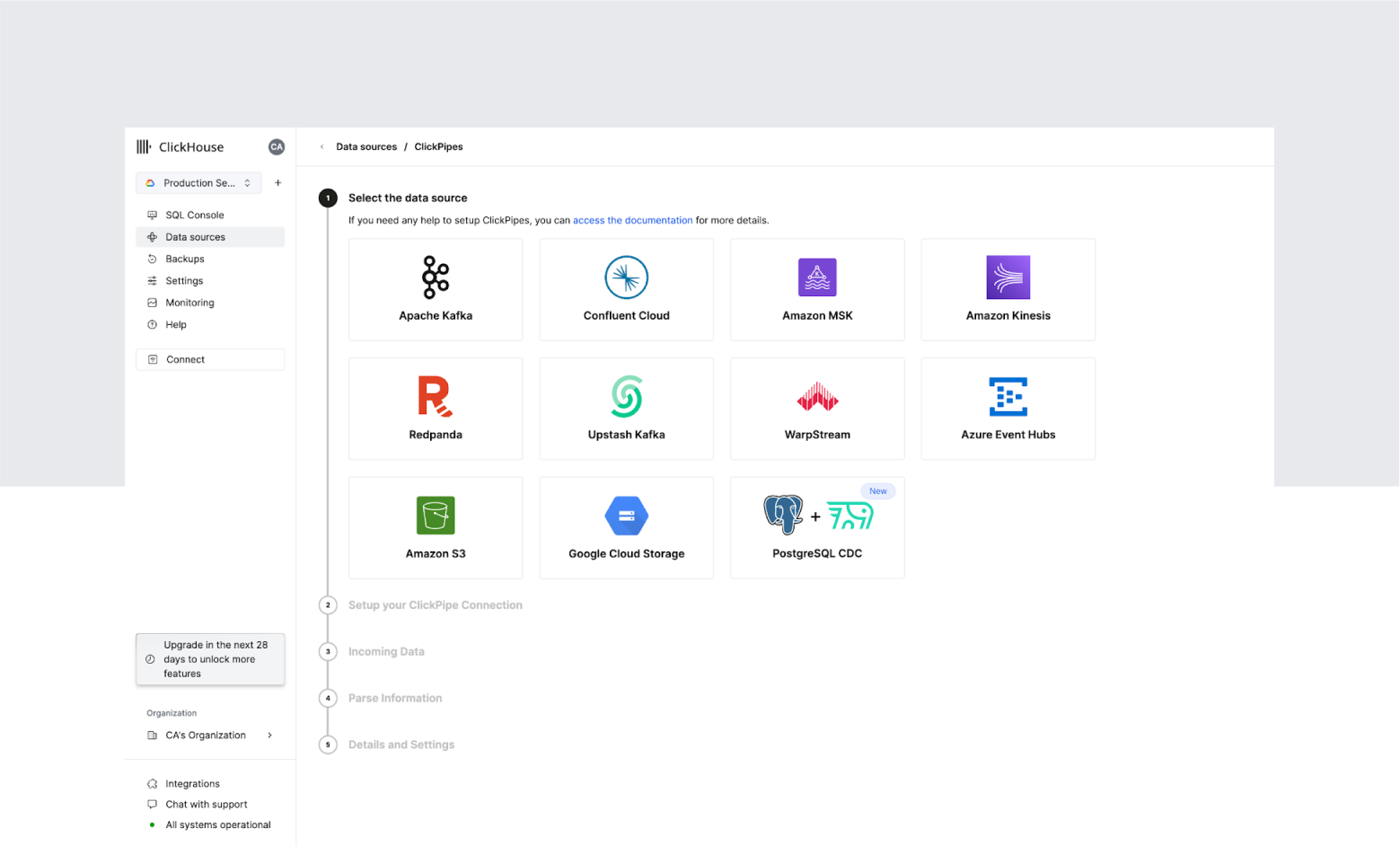

- ClickPipes for managed ingestion from streaming sources including Apache Kafka, Confluent, Postgres + Debezium CDC (PeerDB acquisition in July 2024)

- Observability capabilities through the HyperDX acquisition (March 2025)

- Several clients for common languages to build your backend over ClickHouse®

- SOC 2 Type II compliant

Cost

Pricing for ClickHouse® Cloud is based on your chosen plan and compute size.

Development plans are pinned at 16 GiB of memory and 2 vCPU. They range from $1 to $193 per month, depending on storage ($35/TB compressed) and compute ($0.22 per active hour).

Production plans range from 24 GiB / 6 vCPU to 3,600 GiB / 960 vCPU of compute. Pricing is based on storage (~$50/TB compressed) and compute ($0.75 per compute unit-hour). Costs range from ~$500 to $100,000 per month, depending on cloud, compute units, and storage.

Dedicated infrastructure is also available at customized prices.

You can check pricing for ClickHouse® Cloud and calculate your price based on storage and compute here.
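To see how those rates combine, here is a back-of-the-envelope estimator in Python. It uses the figures quoted above, which may have changed since. It assumes storage is billed per month on compressed data. It treats the number of production "compute units" as an input you supply, because how a unit maps to memory and vCPU is not spelled out here. The official calculator remains the source of truth.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    storage_per_tb_month: float   # USD per compressed TB per month
    compute_per_unit_hour: float  # USD per compute unit per active hour


# Figures as quoted in this article; check current pricing before relying on them.
DEVELOPMENT = Rates(storage_per_tb_month=35.0, compute_per_unit_hour=0.22)
PRODUCTION = Rates(storage_per_tb_month=50.0, compute_per_unit_hour=0.75)


def monthly_cost(rates: Rates, storage_tb: float, active_hours: float,
                 compute_units: float = 1.0) -> float:
    """Storage is charged on compressed data; compute only while the service is active."""
    storage = storage_tb * rates.storage_per_tb_month
    compute = active_hours * compute_units * rates.compute_per_unit_hour
    return round(storage + compute, 2)


# Development service: 0.5 TB compressed, active about 8 hours a day for 30 days.
print(monthly_cost(DEVELOPMENT, storage_tb=0.5, active_hours=8 * 30))
# Production service: 2 TB, always on (~730 h), sized at a hypothetical 8 compute units.
print(monthly_cost(PRODUCTION, storage_tb=2.0, active_hours=730, compute_units=8))
```

The development example comes to about $70 a month, inside the $1 to $193 range quoted above. Idle hours cost nothing in compute because only active hours are charged.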

Developer Experience

ClickHouse® Cloud is a classic "database-as-a-service", providing a clean interface to a hosted database solution. The developer experience demands a level of ClickHouse® expertise, as most of the non-infrastructure database settings are not abstracted. Actions like creating, renaming, or dropping tables, populating materialized views, etc. require knowledge of ClickHouse®'s flavor of SQL.

ClickHouse® Cloud also boasts a solid amount of integrations, both managed and supported through community development. The recent introduction of ClickPipes allows for streaming data ingestion from popular sources like Apache Kafka, Confluent, Postgres + Debezium CDC, and others without setting up additional infrastructure.

For developers keen on building an analytics API service on top of ClickHouse®, ClickHouse® Cloud does offer "query lambda" endpoints in beta. With this feature, you can create static HTTP endpoints from saved queries; however, these don't benefit from the same performance characteristics as the underlying database. For production-ready APIs, you'll need to build an additional backend service.

Takeaways

ClickHouse® Cloud is a flexible and performant way to host a ClickHouse® database on managed infrastructure. It provides great tools for directly interfacing with the database itself, with some additional infrastructure features (ClickPipes, endpoints, etc.) that extend beyond the database itself.

Altinity

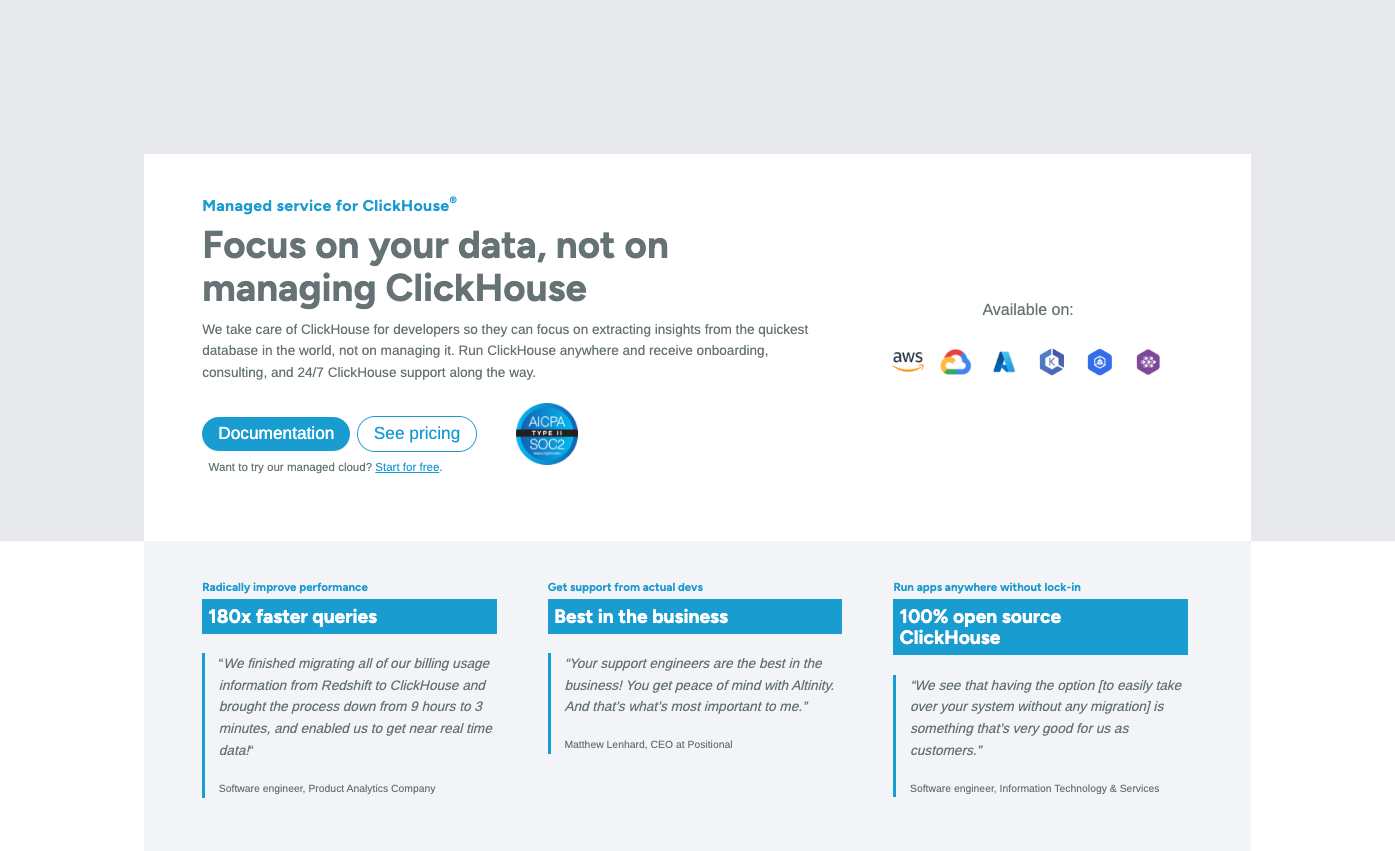

Altinity.Cloud provides managed ClickHouse® deployments on both hosted cloud and VPC. In addition, Altinity offers ClickHouse® consulting services and maintains several open source ClickHouse® projects such as their Kubernetes Operator for ClickHouse®.

Highlights

- Available on AWS, GCP, and Azure or via VPC on your own cloud

- 24/7 ClickHouse® expert support services

- 100% open source ClickHouse® - no forks or add-ons

- Dedicated Kubernetes environment for every account

- Choose any VM or storage type

- Scale compute capacity up/down and extend storage without downtime

- Add shards and replicas to running clusters

- Built-in availability and backups

- SOC 2 Type II certified

Cost

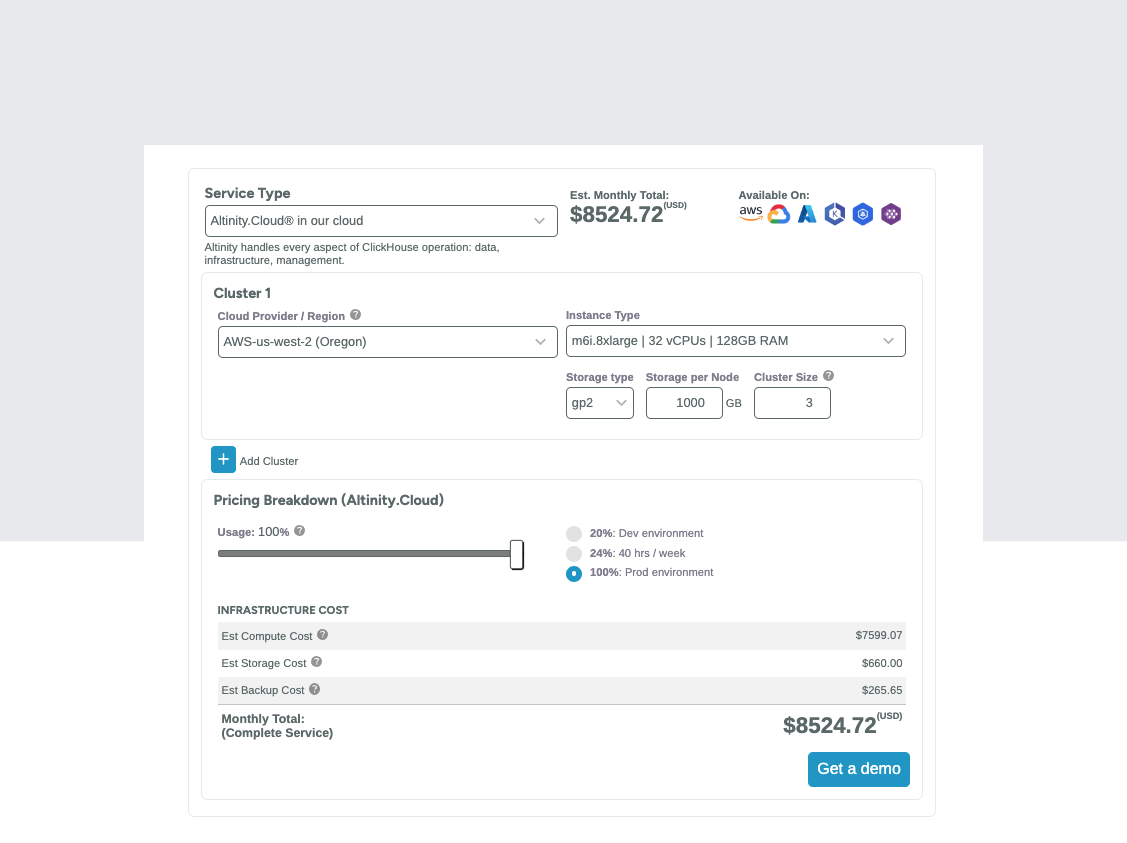

Unlike ClickHouse® Cloud, Altinity does not charge on a usage-based model. Altinity.Cloud is priced based on allocated compute and storage (non-VPC) regardless of usage. Amongst these three, pricing from Altinity.Cloud is the most predictable, as it will only change based on allocation, not usage.

Pricing is based on storage ($220 - $374/TB per month) and allocated compute (instance type + number of clusters) based on activity.

For example, a development instance with 20% usage on a 16 GiB, 4 vCPU GCP cluster with 100 GB of storage would cost about $110 per month (~$75/month for compute, $22/month for storage, and ~$15/month for backups).

A production instance (100% active) running a 3-cluster deployment of m6i.8xlarge instances (32 vCPU, 128 GB RAM) in AWS us-west-2 with 1 TB of storage would cost about $8,500 per month (~$7,600 compute, ~$660 storage, ~$260 for backups).
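Both Altinity examples are simple sums, and the storage figures follow from the low end of the quoted storage range. The sketch below assumes the 3-cluster production deployment stores its 1 TB once per cluster, which is how ~$660 works out at $220/TB. It takes the compute and backup figures as given.

```python
STORAGE_PER_TB = 220.0  # USD/TB per month, low end of the quoted $220 - $374 range


def storage_cost(tb: float, copies: int = 1) -> float:
    """Allocated storage, billed per stored copy (assumption: one copy per cluster)."""
    return tb * copies * STORAGE_PER_TB


def total(compute: float, storage: float, backups: float) -> float:
    return compute + storage + backups


dev = total(compute=75, storage=storage_cost(0.1), backups=15)  # 100 GB = 0.1 TB
prod = total(compute=7_600, storage=storage_cost(1.0, copies=3), backups=260)
print(f"dev ~${dev:,.0f}/month, prod ~${prod:,.0f}/month")
```

The sums come to roughly $112 and $8,520, consistent with the rounded totals above.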

You can get more info on Altinity.Cloud pricing from their pricing calculator.

Developer Experience

Of the three managed ClickHouse®s explored here, Altinity.Cloud will provide the most "raw" ClickHouse® experience. Altinity is certainly for the ClickHouse® enthusiast who wants to deeply understand the database and handle most database operations (with support, of course).

You'll get amazing support with dedicated response time SLAs; Altinity's support engineers are known for their ClickHouse® expertise and 24/7 support services to help you manage your instance.

Altinity provides 100% open-source ClickHouse®, hosted. There are no ancillary services or infrastructure to help manage ingestion, visualization, or application/backend development. This is a pure, hosted ClickHouse®.

Takeaways

Altinity.Cloud is great for those who want to learn and own a ClickHouse® database - either on managed infrastructure or in VPC - with support from some of the best ClickHouse® practitioners in the world. It's not for fast-moving developers who want a ClickHouse® abstraction; it's built for enterprise-level engineers who know - or want to commit to learning with support - how to manage this database at scale.

Tinybird

Unlike ClickHouse® Cloud and Altinity.Cloud (among other managed ClickHouse® services), Tinybird isn't a traditional managed ClickHouse®. It's not simply hosted storage and compute with a nice web UI for interacting with the underlying database client.

Rather, Tinybird is a developer data platform for building analytics on top of the open source ClickHouse®. In addition to providing the things you'd expect from a managed database - hosting, auto-scaling, automatic upgrades, observability, high availability SLAs, etc. - Tinybird provides services to the "left and right" of the database to support data ingestion and application integration.

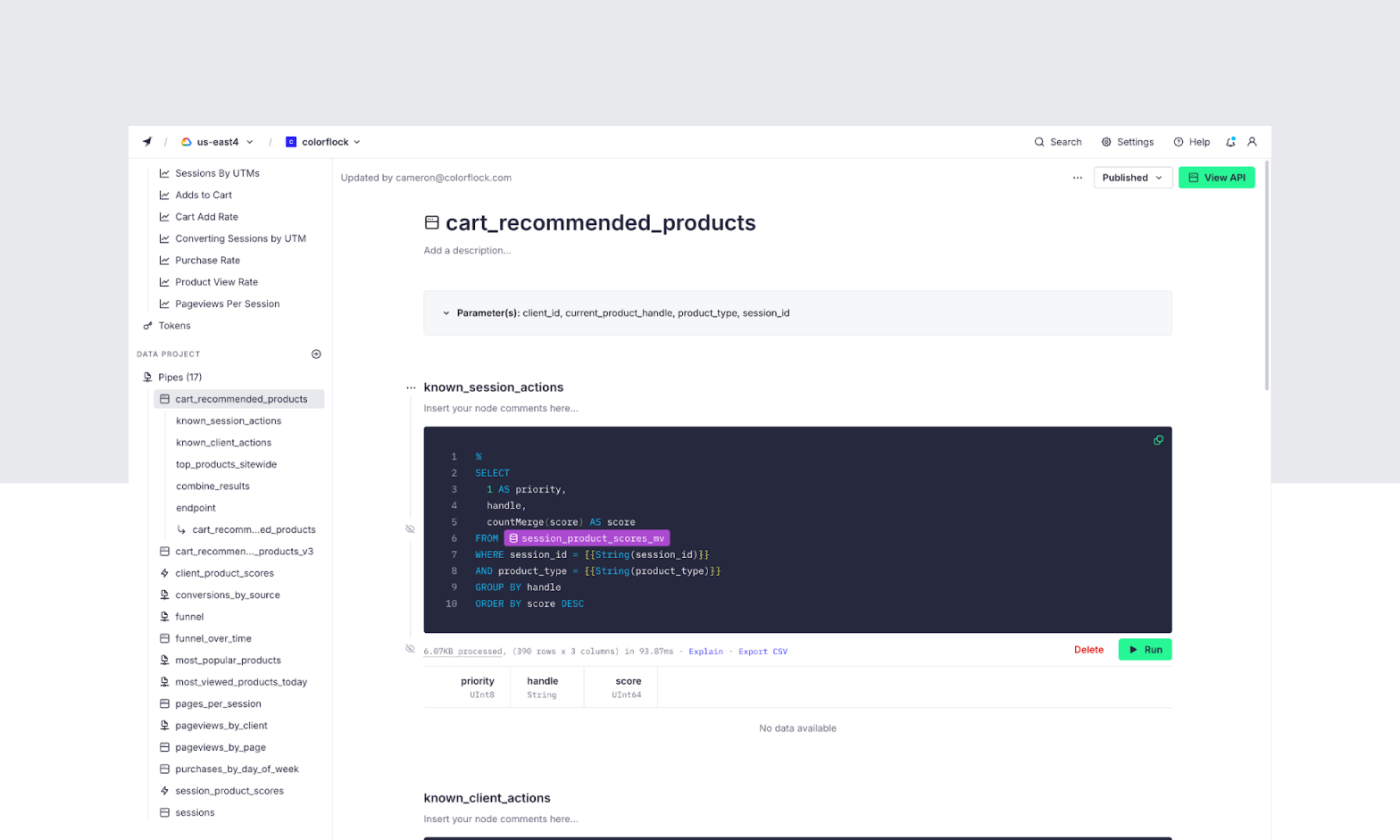

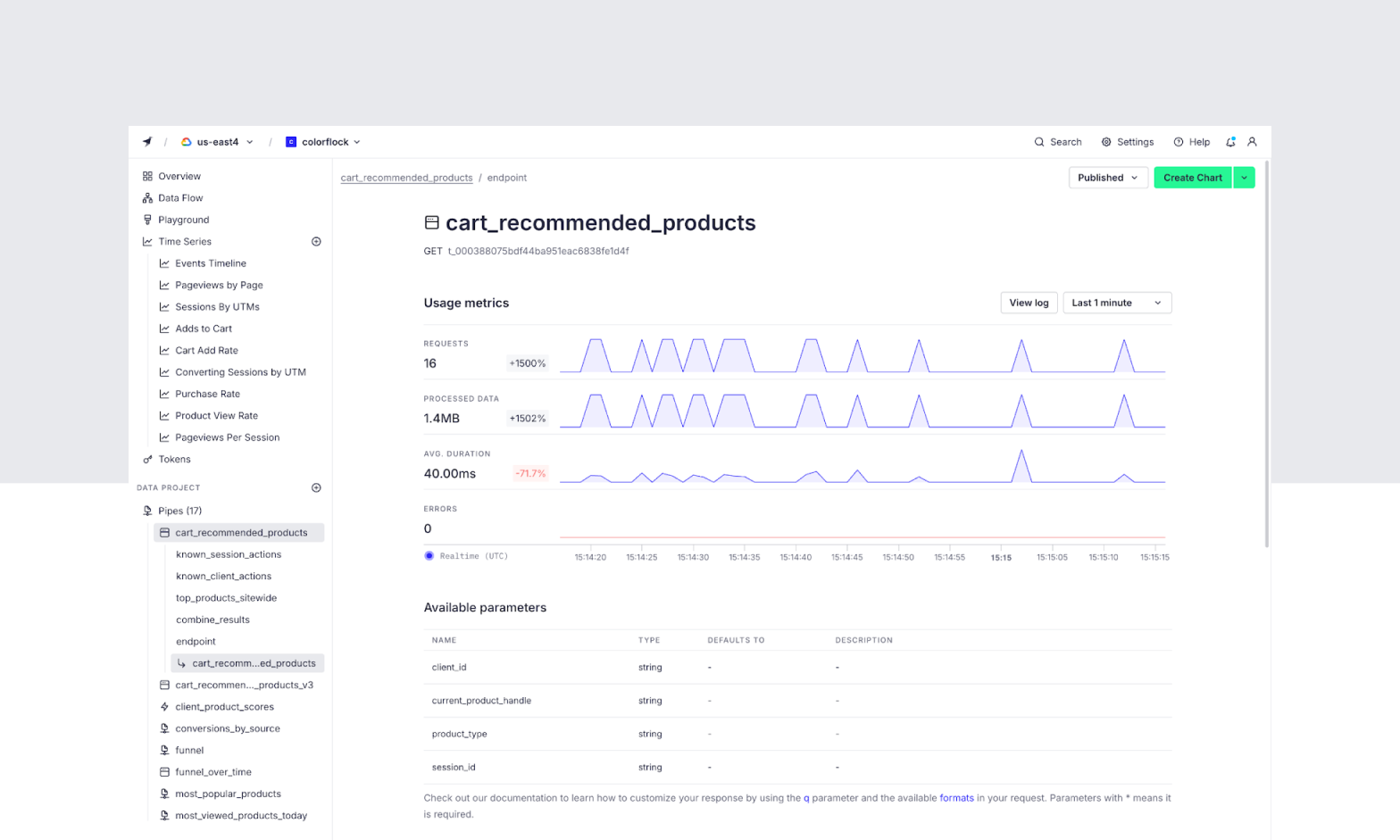

Perhaps the best-known benefit of Tinybird is its "instant API" experience. You can instantly publish any SQL query as a dynamic, scalable REST Endpoint complete with OpenAPI 3.0 specs. Unlike ClickHouse® Cloud's beta endpoints, Tinybird's API Endpoints have been an integral part of the platform since its first public beta and are well supported at scale with minimal latency. For those who want to avoid building a backend service on top of ClickHouse®, Tinybird can provide a huge advantage.
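As a rough illustration of consuming such an endpoint, the Python sketch below builds the request for a published pipe. It uses Tinybird's `/v0/pipes/<name>.json` URL pattern with a bearer token. The pipe name `top_pages`, its `date_from` and `limit` parameters, and the token are all hypothetical. The API host also differs by region, so check the URL shown for your Workspace.

```python
import json
import urllib.parse
import urllib.request

API_HOST = "https://api.tinybird.co"  # region-specific; check your Workspace


def endpoint_request(pipe: str, token: str, **params: str) -> urllib.request.Request:
    """Build a GET request for a published API Endpoint.

    Query-string parameters feed the endpoint's dynamic parameters;
    the token travels in the Authorization header.
    """
    url = f"{API_HOST}/v0/pipes/{urllib.parse.quote(pipe)}.json"
    query = urllib.parse.urlencode(params)
    if query:
        url += f"?{query}"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def fetch(req: urllib.request.Request) -> list:
    # JSON responses carry result rows under the "data" key.
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)["data"]


# Hypothetical endpoint "top_pages" with dynamic parameters date_from and limit.
req = endpoint_request("top_pages", "<READ_TOKEN>", date_from="2025-01-01", limit="10")
print(req.full_url)
# rows = fetch(req)  # needs a real token and a published endpoint
```

Only the URL and header construction run here. The `fetch` function needs a real token and a published endpoint.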

Highlights

- Same database performance as ClickHouse® Cloud and Altinity

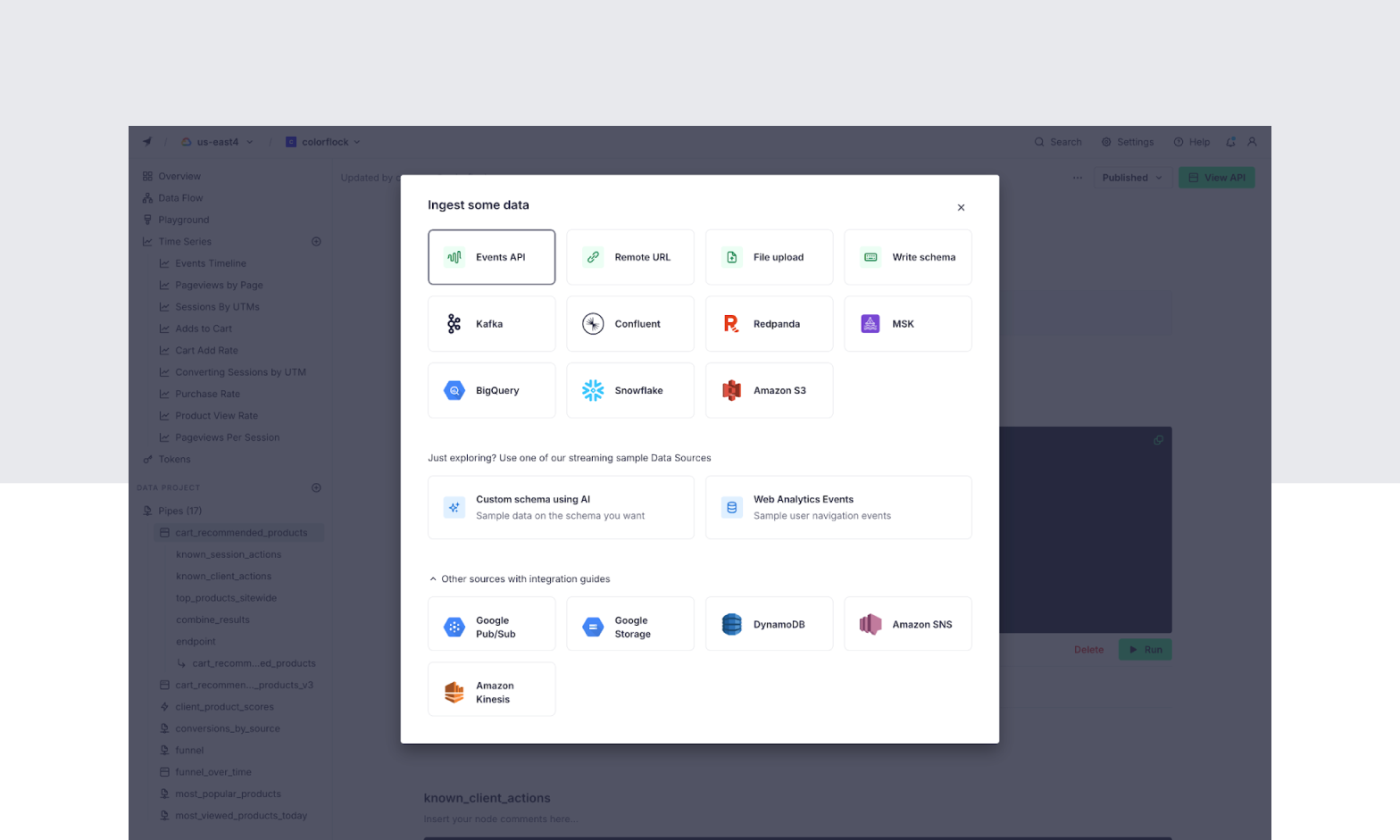

- Managed data source connectors for Kafka, S3, GCS, DynamoDB, etc.

- A managed HTTP streaming endpoint (Events API with 1000k EPS, 20 MB/s, 100 MB max request size limit)

- Managed write service for streaming ingestion

- Managed import/export/transform job queues

- A notebook-style query interface to break up complex queries into manageable nodes

- Explorations - AI-powered data exploration with chat, free queries, and generative UI Series modes (released September 2025)

- LLM usage tracking across Workspaces and Organizations with detailed metrics on requests, costs, and tokens

- Service data sources for advanced monitoring and debugging

- A managed API service

- Slack support community

- Static access tokens for resource management

- Signed JWTs for API Endpoints with customizable scopes and rate limits

- Single-click workflow to publish SQL queries as scalable REST Endpoints with automatically generated OpenAPI specs and dynamic query parameters.

- Automatically generated observability tables that log every write, read, and job

- A plain text file format to define data projects as code

- A full-featured CLI and git integration to manage data projects in production with CI/CD

- Workspaces to group resources and enable role-based collaboration

- SOC 2 Type II Compliant

- Self-managed infra (free)
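The Events API accepts newline-delimited JSON (one event per line) posted to `/v0/events?name=<data source>`. In practice, then, a client mostly needs to split its events into request bodies that stay under the size limit. Below is a minimal sketch. It assumes a hypothetical `web_events` Data Source and an append token. Pass whatever request-size limit applies to your plan rather than relying on the figure above.

```python
import json
import urllib.parse
import urllib.request
from typing import Iterable, Iterator, List

API_HOST = "https://api.tinybird.co"  # region-specific; check your Workspace


def ndjson_chunks(events: Iterable[dict], max_bytes: int) -> Iterator[bytes]:
    """Yield NDJSON request bodies, each at most max_bytes long."""
    chunk: List[bytes] = []
    size = 0
    for event in events:
        line = (json.dumps(event, separators=(",", ":")) + "\n").encode()
        if len(line) > max_bytes:
            # Refuse rather than split one event across two requests.
            raise ValueError("a single event exceeds max_bytes")
        if size + len(line) > max_bytes:
            yield b"".join(chunk)
            chunk, size = [], 0
        chunk.append(line)
        size += len(line)
    if chunk:
        yield b"".join(chunk)


def post_events(body: bytes, datasource: str, token: str) -> None:
    query = urllib.parse.urlencode({"name": datasource})
    req = urllib.request.Request(
        f"{API_HOST}/v0/events?{query}",
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {token}"},
    )
    # urlopen raises urllib.error.HTTPError on 4xx/5xx responses.
    with urllib.request.urlopen(req, timeout=10):
        pass


events = [{"id": i, "action": "click"} for i in range(5)]
bodies = list(ndjson_chunks(events, max_bytes=60))  # tiny limit to show splitting
print([b.count(b"\n") for b in bodies])
# for body in bodies:
#     post_events(body, "web_events", "<APPEND_TOKEN>")
```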

Cost

Tinybird has a time-unlimited Free plan that does not require a credit card.

As of January 31, 2025, Tinybird updated its pricing model to be more predictable and flexible. The new pricing is based on vCPU hours rather than processed data. Users can choose from scalable Developer plan sizes and scale as needed. This model provides more control over costs and allows for better resource planning. Legacy plans based on storage and processed data were sunset on April 29, 2025.

Tinybird offers Enterprise plans with volume discounts, infrastructure-based credits, optional dedicated infrastructure, and support/availability SLAs.

More info is available on Tinybird's public pricing page and billing docs. For an in-depth cost analysis comparing Tinybird and ClickHouse® Cloud across multiple real-world use cases, see our detailed cost comparison guide.

Developer Experience

Of all the managed services for ClickHouse®, Tinybird provides the highest level of abstraction designed to reduce developer friction.

It exposes certain ClickHouse® features, like the SQL language and the definition of table schemas, but it does not provide direct access to the underlying ClickHouse® database. For those who want a pure ClickHouse® experience, this may be a turnoff. But it also means that it's much harder to brick your database with Tinybird.

Tinybird is well known for its incredible developer experience. The core Tinybird workflow, from ingesting data to integrating a real-time API into your production app, can be accomplished in just a few minutes.

For those who want access to the performance of ClickHouse® without having to learn how to manage the database itself, Tinybird is a boon. There's still some need to learn elements of ClickHouse®, for example its SQL syntax and how materialized views work on the database, but most of the database management is abstracted into UI, CLI, and API.

Perhaps the biggest differentiator for Tinybird in its developer experience is the services it provides outside of the database, namely API hosting, managed job queues, its HTTP streaming endpoint, managed ingestion connectors and infrastructure, and its git integration for automated CI/CD workflows.

These features remove the burden of setting up additional infrastructure on top of the database. This results in a great serverless experience for those who want a scalable analytics backend without worrying about infrastructure, compute sizes, etc.

Takeaways

Put simply, Tinybird is for those who want the performance of ClickHouse® without having to learn and scale another database. It's made for developers who want to ship use cases that benefit from ClickHouse®'s speed without needing to spin up the ingestion pipelines, the database cluster, and the backend to support an analytics API.

You can learn more about the distinctions between Tinybird and ClickHouse® here.

Advanced Deployment & Networking Capabilities

Deploy inside your own cloud account to keep full ownership of your infrastructure. Choose AWS, GCP or Azure and run the service within your existing VPC or VNet, maintaining complete isolation from public traffic.

All ingress and egress connections stay under your security policies and firewalls, ensuring that no data ever leaves your private environment.

This configuration aligns perfectly with organisations that have strict governance, compliance, or data-sovereignty requirements while still benefiting from the ease of a managed solution.

With this setup, you control the network, you own the data, and you define the perimeter, yet operations, scaling and maintenance remain fully managed.

There’s no need to provision VMs, clusters or load balancers, and you still receive automatic updates and monitoring.

Regional Choice and Latency Optimisation further enhance performance.

Selecting the region closest to your users or data sources dramatically reduces network hops and end-to-end latency.

Whether your analytics workloads live in Europe, North America or Asia, you get identical SLA guarantees, the same control plane interface, and consistent tooling.

Teams distributed across several time zones can work with the same UI, API, and CLI without any regional fragmentation.

The result is a truly global experience with local performance.

Private Connectivity through Peering and Private Links ensures end-to-end security.

By leveraging VPC Peering, VNet Peering or Private Endpoints, your ingestion pipelines, microservices, and BI tools connect directly to the analytics cluster over private network channels.

There is zero exposure to the public Internet, greatly reducing the surface for attacks or accidental leaks.

Every packet of data travels inside your network, protected by your routing rules and access controls. For many enterprises, this configuration is essential to pass security audits and compliance reviews.

Bring-Your-Own-Cloud (BYOC) Deployment combines autonomy and convenience. Learn more about different ClickHouse® deployment options.

You can use your existing cloud credits, apply enterprise discount programmes, and maintain full cost visibility through your cloud provider’s billing console.

The managed service operates with minimal permissions, just enough to orchestrate nodes and monitor metrics.

This model avoids vendor lock-in, lets you continue using your own monitoring and security tooling, and ensures that data residency and ownership remain entirely under your domain.

Multi-Region and Geo-Replication Support empower global operations. Deploying clusters across multiple regions means your queries run close to where the data lives, reducing response times and enhancing the user experience.

Geo-replication maintains synchronised datasets across continents, enabling active-active or active-passive architectures for redundancy.

If one region becomes unreachable, queries automatically route to a healthy replica elsewhere.

This design brings business continuity and resilience without manual intervention.

From a developer’s perspective, these deployment and networking capabilities translate into fewer dependencies, less infrastructure to maintain, and greater agility.

There is no need to open tickets to network teams, no manual configuration of load balancers or firewalls, and no late-night maintenance windows to modify subnets or IP ranges.

Everything is abstracted behind a clean API and CLI, yet remains transparent enough for audits and security reviews.

Ultimately, this model delivers maximum control with minimum effort.

You can comply with internal security standards, deploy in any region worldwide, scale automatically, and still enjoy a fully managed experience that handles upgrades, monitoring, and fault recovery for you.

Resilience, Upgrades & Cost Efficiency

Automated Backups and Point-in-Time Recovery (PITR) provide strong protection against data loss. Full and incremental backups occur on schedule and are stored securely in object storage, accessible only to authorised identities.

If a table is dropped or a dataset becomes corrupted, you can restore the data as it was at a specific timestamp.

This safety net removes the need for custom backup scripts or manual snapshot management. You gain enterprise-grade data protection without operational overhead.
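The restore logic can be sketched as choosing a backup chain: the newest full backup at or before the target time, followed by the incrementals taken after it. This is a generic Python illustration with hypothetical backup names, not any provider's implementation. It resolves to the latest backup at or before the target. It does not model replaying changes to reach an exact moment between backups.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class Backup:
    taken_at: datetime
    kind: str  # "full" or "incremental"
    name: str


def restore_chain(backups: List[Backup], target: datetime) -> List[Backup]:
    """Return the backups to apply, in order, to restore state as of `target`.

    Start from the newest full backup taken at or before `target`, then
    apply every later incremental that is still at or before `target`.
    """
    usable = sorted((b for b in backups if b.taken_at <= target), key=lambda b: b.taken_at)
    fulls = [i for i, b in enumerate(usable) if b.kind == "full"]
    if not fulls:
        raise LookupError("no full backup precedes the target time")
    start = fulls[-1]
    return [usable[start]] + [b for b in usable[start + 1:] if b.kind == "incremental"]


d = datetime
backups = [
    Backup(d(2025, 1, 1), "full", "full-0101"),
    Backup(d(2025, 1, 2), "incremental", "inc-0102"),
    Backup(d(2025, 1, 8), "full", "full-0108"),
    Backup(d(2025, 1, 9), "incremental", "inc-0109"),
    Backup(d(2025, 1, 10), "incremental", "inc-0110"),
]
print([b.name for b in restore_chain(backups, d(2025, 1, 9, 12))])
```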

High-Availability Clusters and Multi-AZ Replication guarantee continuity during infrastructure incidents.

Each cluster spans multiple availability zones, maintaining replicas of data and metadata across them.

When a zone outage occurs, the service automatically fails over to healthy replicas. The switch happens in seconds, with no manual intervention and no interruption to queries or streaming ingestion.

For mission-critical analytics, this means uninterrupted dashboards, APIs, and insights even during regional events.
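From the client side, this failover can be sketched as a router. The router round-robins over healthy replicas, prefers the local zone, and falls back to other zones only when needed. The hostnames and zones are hypothetical. In practice the `healthy` flag would be driven by periodic probes, such as requests to ClickHouse®'s HTTP `/ping` handler.

```python
import itertools
from dataclasses import dataclass
from typing import List


@dataclass
class Replica:
    host: str
    zone: str
    healthy: bool = True


class FailoverRouter:
    """Round-robins queries over healthy replicas, preferring the local zone."""

    def __init__(self, replicas: List[Replica], local_zone: str) -> None:
        self.replicas = replicas
        self.local_zone = local_zone
        self._counter = itertools.count()

    def pick(self) -> Replica:
        healthy = [r for r in self.replicas if r.healthy]
        if not healthy:
            raise RuntimeError("no healthy replicas")
        local = [r for r in healthy if r.zone == self.local_zone]
        pool = local or healthy  # leave the local zone only when it has no healthy replica
        return pool[next(self._counter) % len(pool)]


replicas = [Replica("ch-a1", "zone-a"), Replica("ch-a2", "zone-a"), Replica("ch-b1", "zone-b")]
router = FailoverRouter(replicas, local_zone="zone-a")
print([router.pick().host for _ in range(3)])

for r in replicas[:2]:
    r.healthy = False  # simulate a zone-a outage
print(router.pick().host)
```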

Continuous Replication and Fail-over Mechanisms extend beyond single-region HA.

For globally distributed businesses, clusters can replicate across regions so that if one geography suffers a full-region failure, traffic is transparently redirected elsewhere.

All this happens under the same control plane, using managed replication queues and version-checked snapshots. It’s disaster recovery as a service, with no scripts or manual playbooks.

Live Schema Migrations and Version Control are another cornerstone. Changing table structures in massive datasets is traditionally risky, but here it becomes routine. You can apply new schemas on production tables without downtime.

The system performs background migrations, copying and transforming data while new writes continue.

Old versions stay intact until the new schema is validated, guaranteeing zero data loss and zero downtime.
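The copy-while-writing pattern above can be sketched with two in-memory "tables." The steps are:

1. Snapshot the existing rows.
2. Backfill them into the new schema in chunks, sending new writes to both schemas meanwhile.
3. Cut over only once the row counts match.

This is a generic Python illustration, with a hypothetical `country` column added as the schema change. In ClickHouse® the final swap might be done with `EXCHANGE TABLES`, but this sketch does not talk to a database.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]


class Migration:
    """Snapshot, backfill in chunks, dual-write, then cut over."""

    def __init__(self, old: List[Row], transform: Callable[[Row], Row]) -> None:
        self.old = old                # the existing table stays intact
        self.new: List[Row] = []      # the table with the new schema
        self.transform = transform
        self._snapshot = len(old)     # rows that existed when the migration began
        self._copied = 0

    def write(self, row: Row) -> None:
        # New writes land in both schemas while the backfill runs.
        self.old.append(row)
        self.new.append(self.transform(row))

    def backfill_step(self, chunk_size: int) -> bool:
        """Copy the next chunk of pre-existing rows; return True when done."""
        end = min(self._copied + chunk_size, self._snapshot)
        self.new.extend(self.transform(r) for r in self.old[self._copied:end])
        self._copied = end
        return self._copied == self._snapshot

    def cut_over(self) -> List[Row]:
        # Switch readers only after validation; otherwise keep the old schema.
        if self._copied != self._snapshot or len(self.new) != len(self.old):
            raise RuntimeError("migration not validated")
        return self.new


def add_country(row: Row) -> Row:
    # Hypothetical schema change: add a column with a default value.
    return {**row, "country": row.get("country", "unknown")}


m = Migration([{"id": i} for i in range(3)], add_country)
m.backfill_step(2)
m.write({"id": 3, "country": "ES"})  # arrives mid-migration
m.backfill_step(2)
table = m.cut_over()
print(sorted(r["id"] for r in table))
```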

Upgrading ClickHouse® versions follows the same philosophy. You can pin your current version for stability or enable rolling upgrades for continuous improvement.

During an upgrade, new nodes are deployed with the latest version, replication catches up, queries drain from the old nodes, and then those are retired.

This rolling approach provides predictable upgrades and stable performance.
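The rolling, make-before-break sequence can be simulated in a few lines of Python. The simulation checks the property that matters: serving capacity never drops below where it started. Node names and versions are hypothetical, and replication catch-up is assumed rather than modeled.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    name: str
    version: str
    serving: bool = True


@dataclass
class Cluster:
    nodes: List[Node]
    serving_history: List[int] = field(default_factory=list)

    def snapshot(self) -> None:
        # Record how many nodes are serving queries at this moment.
        self.serving_history.append(sum(n.serving for n in self.nodes))


def rolling_upgrade(cluster: Cluster, target: str) -> None:
    """Replace every node not on `target`, one at a time, make-before-break."""
    for old in [n for n in cluster.nodes if n.version != target]:
        new = Node(f"{old.name}-{target}", target, serving=False)
        cluster.nodes.append(new)  # 1. deploy a node on the new version
        # 2. a real system waits here for replication to catch up (not modeled)
        new.serving = True
        cluster.snapshot()
        old.serving = False        # 3. drain queries away from the old node
        cluster.snapshot()
        cluster.nodes.remove(old)  # 4. retire it


cluster = Cluster([Node("ch-1", "24.8"), Node("ch-2", "24.8")])
cluster.snapshot()
rolling_upgrade(cluster, "25.3")
print([n.name for n in cluster.nodes], min(cluster.serving_history))
```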

Predictable Cost and Cloud Credit Alignment mean you always know what you’re paying for.

Because the service can run within your own cloud subscription, you can apply enterprise discounts, use existing reserved capacity, or leverage committed-use agreements.

There are no hidden surcharges or opaque consumption multipliers. Storage of cold data and backups in your object-storage keeps costs transparent and under your direct control.

For long-term archives, you can set lifecycle policies that automatically move older backups to lower-cost classes such as S3 Glacier or Coldline.
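On S3, such a policy is a small JSON document. The Python sketch below builds one that transitions objects under a backup prefix to the Glacier storage class after a given number of days. The bucket and prefix names are hypothetical. GCS (Coldline) uses a different lifecycle format, which is not shown. With boto3 you would pass the result to `put_bucket_lifecycle_configuration`.

```python
import json


def backup_lifecycle(prefix: str, archive_after_days: int) -> dict:
    """Build an S3 lifecycle configuration that archives older backups."""
    if archive_after_days < 0:
        raise ValueError("archive_after_days must be non-negative")
    return {
        "Rules": [
            {
                "ID": "archive-old-backups",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [{"Days": archive_after_days, "StorageClass": "GLACIER"}],
            }
        ]
    }


config = backup_lifecycle("clickhouse-backups/", archive_after_days=30)
print(json.dumps(config, indent=2))
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="my-backup-bucket", LifecycleConfiguration=config)
```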

Your storage grows linearly with your data, and snapshots remain retrievable when needed. However, restoring from archive tiers can add retrieval time or retrieval fees, depending on the storage class.

The cost model becomes simple, auditable, and scalable.

Scalable Infrastructure and Autonomous Scaling ensure performance under any workload. As your queries or ingestion volumes increase, the system automatically performs vertical scaling (adding CPU and RAM) or horizontal scaling (adding replicas).

The scaling algorithm uses real-time metrics—CPU usage, query latency, and ingestion rate—to adjust capacity proactively.

The make-before-break principle ensures new capacity is online before any existing node is removed, eliminating downtime.

During busy periods, clusters expand seamlessly; during quiet hours, they shrink to save cost. Developers no longer need to predict usage patterns or schedule manual resizes.

The system keeps latency low, throughput high, and bills efficient.
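A scaling policy driven by the signals named above can be sketched as a function that maps current metrics to a desired replica count. The thresholds and bounds are illustrative assumptions, not any provider's actual policy. Only horizontal scaling is modeled, and the make-before-break rule would apply when the new count is executed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    cpu: float             # average CPU utilization, 0.0 - 1.0
    p95_latency_ms: float  # 95th-percentile query latency
    ingest_lag_s: float    # how far ingestion is behind real time


def scaling_decision(m: Metrics, replicas: int, min_replicas: int = 2,
                     max_replicas: int = 8) -> int:
    """Return the desired replica count (illustrative thresholds)."""
    pressure = m.cpu > 0.75 or m.p95_latency_ms > 500 or m.ingest_lag_s > 30
    idle = m.cpu < 0.25 and m.p95_latency_ms < 100 and m.ingest_lag_s < 5
    if pressure:
        return min(replicas + 1, max_replicas)
    if idle:
        return max(replicas - 1, min_replicas)
    return replicas  # inside the comfort band: leave capacity alone


print(scaling_decision(Metrics(cpu=0.9, p95_latency_ms=200, ingest_lag_s=1), replicas=3))
print(scaling_decision(Metrics(cpu=0.1, p95_latency_ms=50, ingest_lag_s=1), replicas=3))
```

Requiring all three signals to be low before shrinking, but any one to be high before growing, biases the policy toward keeping latency low at some extra cost.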

Behind the scenes, advanced load-balancing and query-routing mechanisms distribute requests evenly across replicas.

This avoids hotspots, ensures predictable response times, and allows transparent caching of frequent queries.

The architecture separates compute and storage, so heavy analytical queries can run independently of ingestion workloads without contention.

Observability and Proactive Monitoring complement resilience. Every query, ingestion event, and system metric — including those coming from Internet of Things devices — is captured automatically.

You can inspect these through pre-built dashboards or query the logs directly using SQL.

Alerting thresholds can be defined for latency, error rates, or ingestion backpressure, enabling real-time visibility into system health.
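Threshold alerting of this kind reduces to comparing the latest metric values against configured limits. Below is a minimal Python sketch. The metric names and limits are hypothetical, and wiring the result to a pager or chat channel is left out.

```python
from typing import Dict, List

# Hypothetical thresholds; tune them to your own latency and ingestion targets.
THRESHOLDS: Dict[str, float] = {
    "p95_latency_ms": 300.0,
    "error_rate": 0.01,          # fraction of failed requests
    "ingest_backlog_rows": 1e6,  # rows waiting to be written
}


def breached(sample: Dict[str, float], thresholds: Dict[str, float] = THRESHOLDS) -> List[str]:
    """Return, sorted, the metrics whose latest value exceeds its threshold."""
    return sorted(k for k, limit in thresholds.items() if sample.get(k, 0.0) > limit)


print(breached({"p95_latency_ms": 450, "error_rate": 0.002, "ingest_backlog_rows": 2e6}))
```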

Governance and Access Control features reinforce operational safety.

Role-based access control (RBAC) with token-scoped permissions and row-level security lets you expose APIs safely to external applications or partners.

Audit logs record every configuration change and query, supporting full traceability and compliance with standards such as SOC 2 Type II and HIPAA.

No Vendor Lock-In at the Data Level remains a core principle. The service runs standard ClickHouse®, stores all data in open formats on object storage, and exposes fully compatible SQL and HTTP interfaces.

You can export, migrate, or replicate your data at any time using standard tools.

This openness ensures that your analytical investment remains portable and future-proof.

Operationally, the combination of automation, resilience, and transparency eliminates the need for traditional runbooks or manual interventions.

Your engineers can focus on building features, dashboards, and real-time experiences, rather than firefighting infrastructure issues.

24/7 Managed Operations and Support complement the technology stack. Experienced engineers monitor clusters continuously, receive automatic alerts, and act before performance degradation affects users.

The same team assists with query optimisation, ingestion tuning, and schema design.

For development teams, this means direct access to experts who understand both ClickHouse® internals and real-world workloads.

Service-Level Objectives (SLOs) cover availability, durability, and response times. Multi-AZ replication combined with automated backups allows ambitious SLOs for uptime and data recovery.

These targets are visible in the control panel and can be exported to your observability stack.

Meeting them becomes a matter of configuration, not manual enforcement.
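An availability SLO translates directly into a downtime budget. The arithmetic is shown below for a 30-day window. The targets are examples, not figures from any provider.

```python
def error_budget_minutes(slo: float, days: int = 30) -> float:
    """Minutes of allowed unavailability in a window for an availability SLO."""
    if not 0.0 < slo < 1.0:
        raise ValueError("slo must be a fraction such as 0.999")
    return round(days * 24 * 60 * (1.0 - slo), 2)


for slo in (0.99, 0.999, 0.9999):
    print(f"{slo:.2%} -> {error_budget_minutes(slo)} min / 30 days")
```

Each extra "nine" cuts the budget tenfold. For example, 99.9% allows about 43 minutes of downtime in 30 days, while 99.99% allows under 5.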

In the long run, this level of automation transforms the way teams operate. Projects that previously required dedicated infrastructure teams now run with just a few developers and data engineers.

Releases become faster, schema changes safer, and analytics pipelines more reliable.

Costs are predictable, scaling is elastic, and downtime is virtually eliminated.

Every technical improvement reinforces the same outcome: speed of execution and trust in data.

Developers can experiment, deploy, and iterate confidently, knowing the system will scale, recover, and stay within budget.

For teams seeking practical insights from production workloads, this architecture echoes lessons shared in What I learned operating ClickHouse®, offering guidance on stability, scaling, and maintenance at scale.

A list of managed ClickHouse® services

Last updated October 2025

- Tinybird

- ClickHouse® Cloud

- Altinity

- Aiven Managed ClickHouse®

- Yandex Cloud Managed Service for ClickHouse®

- Elestio Managed ClickHouse®

- Nebius ClickHouse® Cluster

- DoubleCloud ClickHouse® as a Service (shut down March 2025)

- Propel Data (shut down March 2025)

How to choose a managed ClickHouse® service

Choosing the right managed ClickHouse® depends on your appetite for learning ClickHouse® and how much abstraction you want. Every managed ClickHouse® mentioned here will provide a comparable level of performance when properly configured. The difference will be in the features provided, the pricing structure, and the level of abstraction on top of the database.

| Feature | ClickHouse® Cloud | Altinity | Tinybird |
|---|---|---|---|
| Multi-cloud availability | ✔ | ✔ | ✔ |
| Bring Your Own Cloud | AWS in beta | ✔ | No BYOC, but free self-managed |
| Usage-based Pricing | ✔ | Limited | ✔ |
| Infrastructure-based Pricing | ✔ | ✔ | ✔ |
| Free Trial | 30 days | 30 days | Unlimited |
| Self-Service Startup | ✔ | X | ✔ |
| High Availability | ✔ | ✔ | ✔ |
| Automatic Backups | ✔ | ✔ | ✔ |
| Automatic Database Upgrades | ✔ | X | ✔ |
| Collaborative Workspaces | X | X | ✔ |
| IP White/Blacklisting | ✔ | ✔ | X |
| Managed Streaming Connectors | ✔ | Limited | ✔ |
| Managed Batch Connectors | X | X | ✔ |
| Web UI and SQL Console | ✔ | ✔ | ✔ |
| CLI | X | X | ✔ |
| API | ✔ | ✔ | ✔ |
| Connect to ClickHouse® Client | ✔ | ✔ | X |
| Managed HTTP Streaming | X | X | ✔ |
| Managed Import/Export Queues | X | X | ✔ |
| Terraform Provider | ✔ | ✔ | X |
| Hosted API Endpoints | X | X | ✔ |
| Endpoint Token Management | X | X | ✔ |
| Support for Custom JWTs | X | X | ✔ |
| Native Charting Library | X | X | ✔ |
| Git integration | X | X | ✔ |
| SOC 2 Type II Certified | ✔ | ✔ | ✔ |

Services such as Altinity.Cloud provide a very pure managed ClickHouse® experience on hosted infrastructure. Some teams value this flexibility and are willing to spend time understanding the database itself.

ClickHouse® Cloud can provide a nice middle ground for developers who want slightly more abstraction but still want full access to the underlying database. ClickHouse® Cloud is great - for example - in conjunction with business intelligence tools that can directly access the database client and run queries for visualization. But, if your goal is to build an app backend on top of ClickHouse®, you'll still have to manage that yourself.

Tinybird is for developers who want to ship user-facing analytics features to production, faster. It comes with a higher level of abstraction that limits direct database access, but in doing so it reduces time to market when building on top of ClickHouse®. Tinybird offers additional infrastructure and services that other managed ClickHouse® options do not, extending the functionality beyond the database while still harnessing ClickHouse®'s performance.

For a detailed comparison of how Tinybird and ClickHouse® Cloud differ in infrastructure management, developer experience, and pricing, see our comprehensive guide on the key differences between Tinybird and ClickHouse® Cloud. For a comprehensive comparison of ClickHouse® alternatives including managed services, cloud data warehouses, and real-time OLAP engines, see our honest comparison of the top ClickHouse® alternatives in 2025. If you're specifically evaluating alternatives to ClickHouse® Cloud, read our detailed comparison of ClickHouse® Cloud alternatives.

To get started with Tinybird, you can sign up for free and start building. Whether you need managed ClickHouse or a complete real-time analytics platform, Tinybird has you covered.