Our friends at Estuary have just released Dekaf, a Kafka protocol-compatible interface to Estuary Flow. This is great news for Tinybird users; you can now use Tinybird’s existing Kafka Connector to consume CDC streams from the many, many data sources that Estuary integrates!

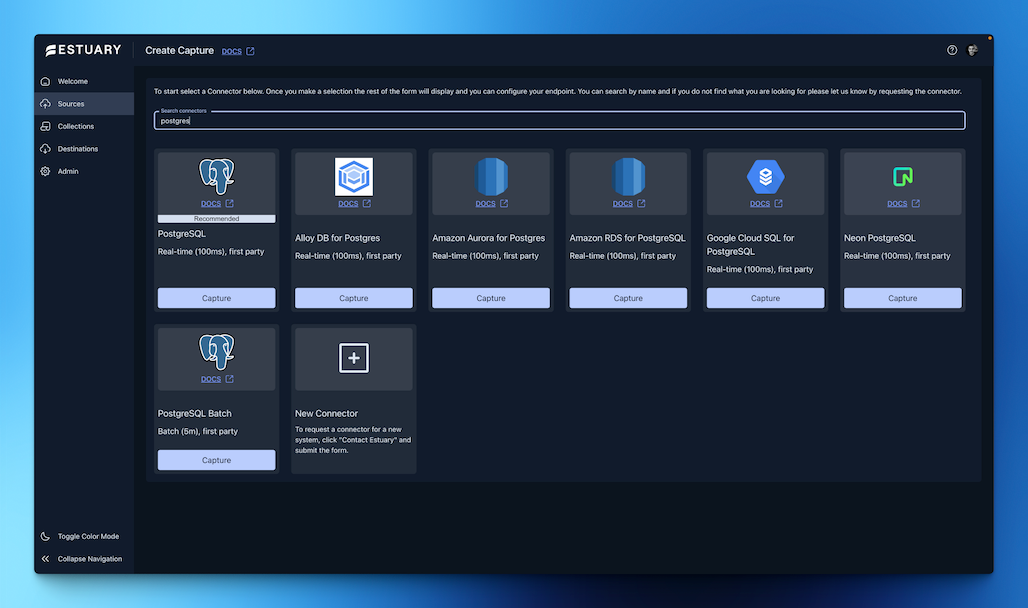

Estuary’s data sources cover a huge range of the data ecosystem, from the world’s most popular database, PostgreSQL, to NoSQL stores like MongoDB and warehouses like Snowflake and BigQuery. There are too many to list here, so check out Estuary’s connector page to find the connector you need!

Together, Estuary and Tinybird offer an incredible combination of price, performance, and productivity. Both platforms solve the developer experience problems common across the data ecosystem without compromising the computational efficiency of the underlying tech. In Tinybird’s case, that’s because we use the best-of-breed, open source database ClickHouse. For Estuary, it’s their open source Flow.

Like many, we had a warehouse-centric data platform that just couldn't support the latency and concurrency requirements for user-facing analytics at any reasonable cost. When we moved those use cases to Tinybird, we shipped them 5x faster and at a 10x lower cost than our prior approach.

Guy Needham, Staff Engineer at Canva

You can sign up for both Tinybird and Estuary for free. No credit card is required. Need help? Join the Tinybird Slack Community and the Estuary Slack Community to get support with both products and the integration.

Continue reading to learn more about using Tinybird and Estuary together.

How it works

Estuary has released Dekaf, a new way to integrate with Estuary Flow that presents a Kafka protocol-compatible API, allowing any existing Kafka consumer to consume messages from Estuary Flow as if it were a Kafka broker. Thanks to Tinybird’s existing Kafka Connector, that means Tinybird can now connect to Estuary Flow directly.
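To make "any existing Kafka consumer" a little more concrete, here is a minimal sketch of the settings a generic Kafka client would need to point at Dekaf. The keys are standard librdkafka-style configuration properties. The values are assumptions: the broker address below is a placeholder, the SASL settings and username are our best guess at a typical token-authenticated setup, and the `dekaf_consumer_config` helper is hypothetical. Take the real broker details from the Dekaf docs and use a refresh token from your Estuary dashboard.

```python
from typing import Dict


def dekaf_consumer_config(
    bootstrap_servers: str,
    username: str,
    refresh_token: str,
    group_id: str,
) -> Dict[str, str]:
    """Build librdkafka-style settings for a consumer that reads from Dekaf.

    Hypothetical helper: the SASL_SSL / PLAIN choices are assumptions,
    so confirm them against the Dekaf docs before relying on them.
    """
    if not refresh_token:
        raise ValueError("an Estuary refresh token is required")
    return {
        "bootstrap.servers": bootstrap_servers,
        "security.protocol": "SASL_SSL",   # assumption: TLS plus SASL auth
        "sasl.mechanism": "PLAIN",         # assumption: token sent as password
        "sasl.username": username,
        "sasl.password": refresh_token,
        "group.id": group_id,
        "auto.offset.reset": "earliest",   # start from the oldest available message
    }


# Placeholder values only; none of these identify a real endpoint.
example_config = dekaf_consumer_config(
    bootstrap_servers="dekaf-broker.example.invalid:9092",
    username="<username-from-dekaf-docs>",
    refresh_token="<your-estuary-refresh-token>",
    group_id="tinybird-demo",
)
print(sorted(example_config))
```

A dictionary like this could be handed to a Kafka client such as `confluent_kafka.Consumer`. Tinybird’s Kafka Connector asks for the same kind of details through its UI, so you don’t need to write any consumer code yourself.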

Tinybird provides various out-of-the-box Connectors to ingest data, with the most popular being Kafka, S3, and HTTP. While Tinybird also supports first-party integrations with tools like DynamoDB, Postgres, and Snowflake, it doesn't currently support the same breadth of data source integrations offered by Estuary. That is why we’re so excited not only about the integration with Estuary Flow, but also about how it's built.

Dekaf is yet another example of the Kafka protocol becoming bigger than Kafka itself (something similar can also be said about the S3 API and the glut of S3-compatible storage systems available today). This has some pretty nice knock-on benefits. For you, it makes your data pipelines more consistent and easier to integrate with your existing stack, and it reduces vendor lock-in, as you can take advantage of the Kafka API’s wide support in data tooling.

For Tinybird and Estuary, it means neither of our engineering teams needs to spend time building vendor-specific API integrations. Instead, we can focus on building the best real-time analytics platform, and Estuary can focus on solving real-time data capture.

We’re huge fans of how Estuary is solving that problem. Real-time ETLs allow you to maintain data freshness in your pipeline and eliminate stale data with diminished value. When data arrives faster, it gets processed faster. When it gets processed faster, it provides answers faster. Fast data begets fast and responsive features and services that increase customer trust and adoption, turning your data into a revenue generator (instead of a cost center).

Get started with CDC using Tinybird and Estuary Flow’s Dekaf

This example will walk you through setting up a PostgreSQL Change Data Capture pipeline that streams data into Tinybird in real time. You can also read more in the Tinybird docs, or see Estuary’s docs.

Create PostgreSQL Capture in Estuary Flow

With your PostgreSQL running and configured for CDC, create a capture in Estuary Flow.

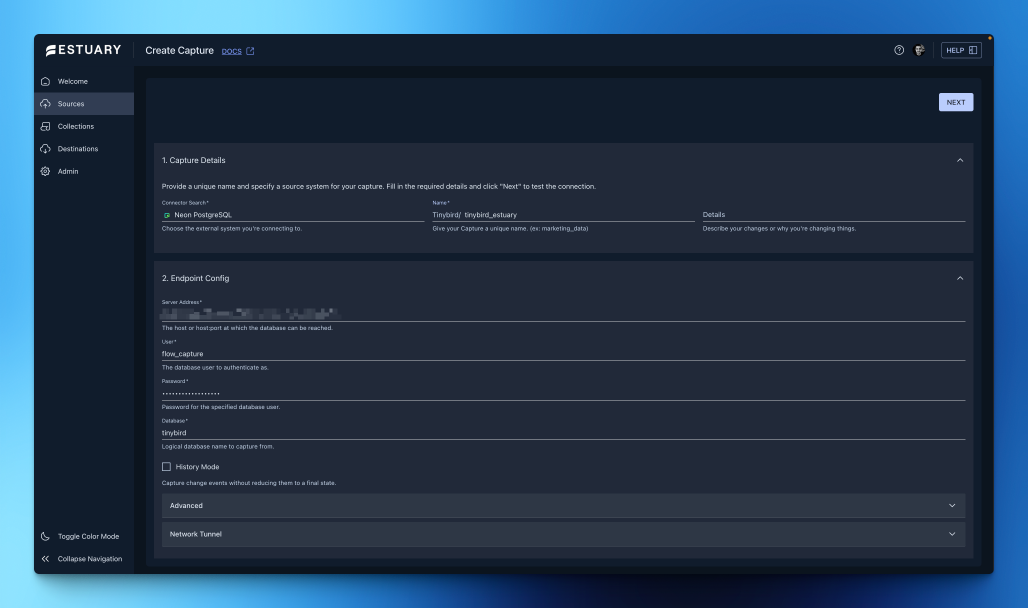

Configure the Endpoint by entering your Postgres server address, user, password, and database.

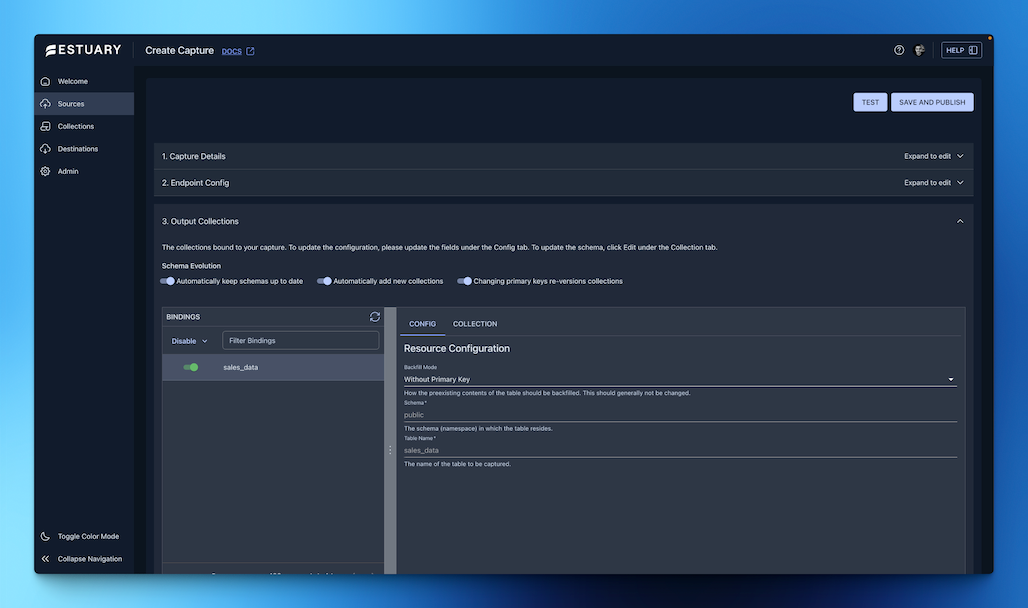

Select the tables that you want to sync by defining collections.

Press Save & Publish to initialize the connector. This will kick off a backfill first, then automatically switch into an incremental continuous change data capture mode.

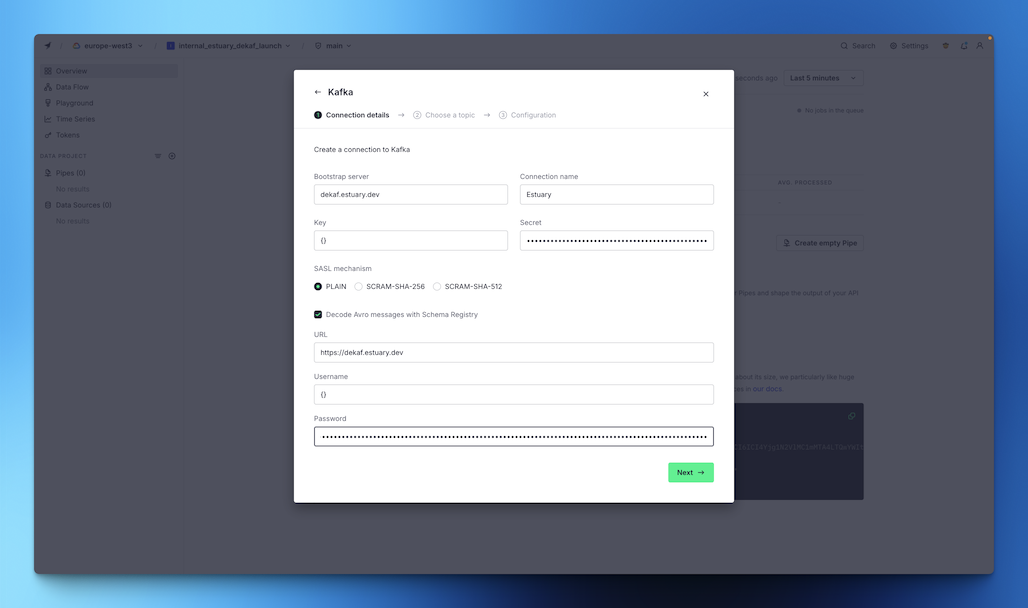

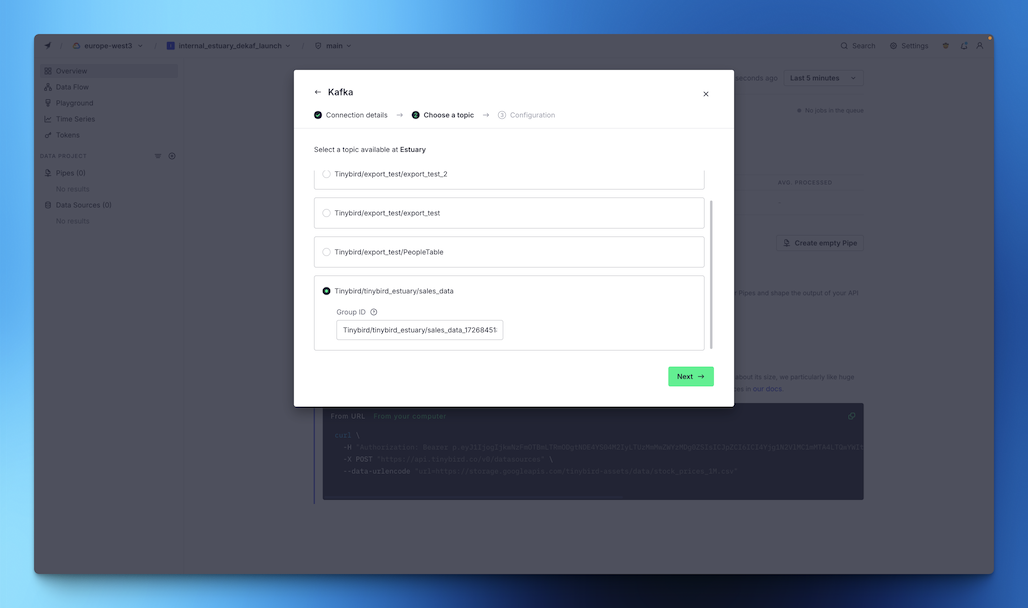
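If the backfill-then-incremental behavior is new to you, the sketch below shows the general idea of a CDC stream: the backfill arrives as a series of inserts, and later changes arrive as updates and deletes against the same keys. The event shape (`op`, `id`, `row`) and the `apply_changes` function are hypothetical and purely illustrative. They are not Estuary’s actual document format.

```python
from typing import Any, Dict, Iterable

Row = Dict[str, Any]


def apply_changes(events: Iterable[Dict[str, Any]]) -> Dict[Any, Row]:
    """Fold a stream of change events into the latest row per key.

    Hypothetical event shape: {"op": "c" | "u" | "d", "id": key, "row": {...}}.
    """
    state: Dict[Any, Row] = {}
    for event in events:
        key = event["id"]
        if event["op"] == "d":
            # A delete removes the key from the current state.
            state.pop(key, None)
        else:
            # Creates (including backfilled rows) and updates both upsert.
            state[key] = event["row"]
    return state


events = [
    # Backfill: existing rows are emitted as creates.
    {"op": "c", "id": 1, "row": {"id": 1, "plan": "free"}},
    {"op": "c", "id": 2, "row": {"id": 2, "plan": "free"}},
    # Incremental changes captured after the backfill completes.
    {"op": "u", "id": 1, "row": {"id": 1, "plan": "pro"}},
    {"op": "d", "id": 2, "row": {}},
]

print(apply_changes(events))  # {1: {'id': 1, 'plan': 'pro'}}
```

The same fold works whether an event came from the backfill or from ongoing capture, which is why the switch between the two modes needs no action on your part.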

In Tinybird, create a Kafka Data Source and configure it with the Dekaf settings. You’ll need the broker details from the Dekaf docs, and a new refresh token from your Estuary admin dashboard.

Select the Estuary Flow collection you wish to ingest into Tinybird from the list.

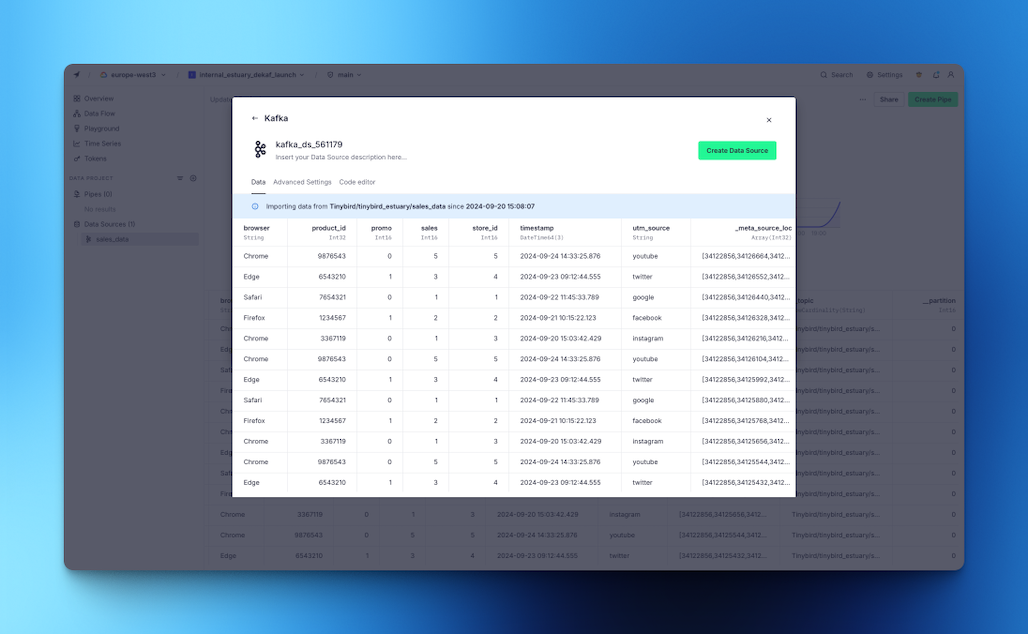

Preview the data, and click Create Data Source to start ingesting!

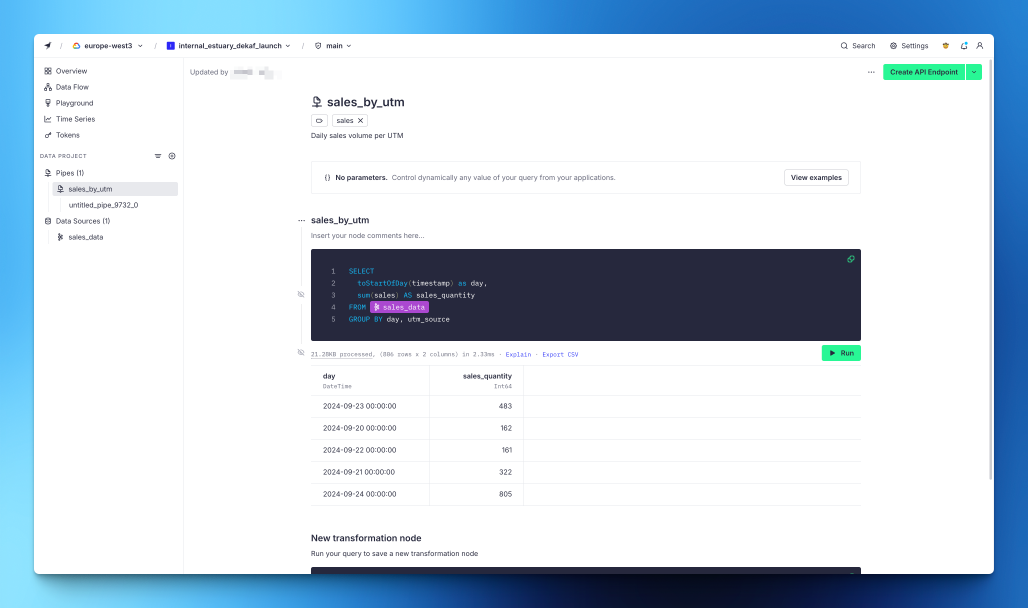

Now you can create a Pipe and start building real-time analytics from your Postgres CDC stream.

Get started with Tinybird

Tinybird is the real-time platform for user-facing analytics. Unify your data from hundreds of sources (thanks, Estuary 😉), query it with SQL, and integrate it into your application via highly scalable, hosted REST API Endpoints.

You can sign up for Tinybird for free, with no time limit or credit card required. Need help? Join our Slack Community or check out the Tinybird docs for more resources.